HDInsight HBase is offered as a managed cluster that is integrated into the Azure environment. The clusters are configured to store data directly in Azure Storage, which provides low latency and increased elasticity in performance and cost choices. This lets customers build interactive websites that work with large datasets, build services that store sensor and telemetry data from millions of endpoints, and analyze this data with Hadoop jobs. HBase and Hadoop are good starting points for big data projects in Azure, and the services can enable real-time applications to work with large datasets. This article walks through the approaches for migrating an on-premises HBase cluster to HDInsight HBase.

For best practices and the benefits of migrating to Azure HDInsight, see the following documentation:

https://docs.microsoft.com/en-us/azure/hdinsight/hadoop/apache-hadoop-on-premises-migration-motivation

Use Case: This example demonstrates two approaches to migration.

Copy the hbase folder: With this approach, you copy all HBase data, without being able to select a subset of tables or column families. The snapshot approach described next provides greater control. HBase uses the default storage selected when creating the cluster, and stores its data and metadata files under the path /hbase. This approach involves more downtime, and the downtime grows with the size of the data.

Snapshot: This enables you to take a point-in-time backup of data in your HBase datastore. Snapshots have minimal overhead and complete within seconds, because a snapshot operation is effectively a metadata operation capturing the names of all files in storage at that instant. At the time of a snapshot, no actual data is copied. Snapshots rely on the immutable nature of the data stored in HDFS, where updates, deletes, and inserts are all represented as new data. You can restore (clone) a snapshot on the same cluster, or export a snapshot to another cluster. Snapshots are taken table by table, and the advantage of this approach is less downtime.

Incremental Load: This step is common to both approaches.

Let's run through this step by step.

Option 1: Copying the hbase folder

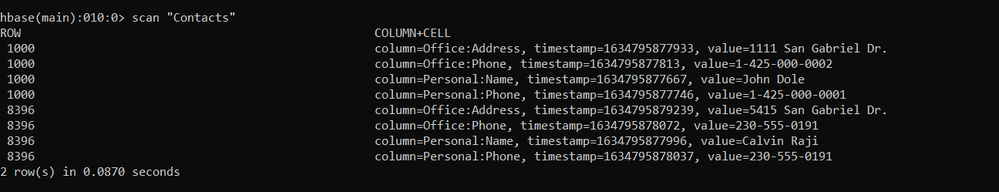

Step 1: Create Table in Source Cluster and insert some data
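
As a rough illustration of Step 1, the sketch below generates HBase shell statements that create a table and insert a few rows. The table name `sensor_readings`, the column family `d`, and the row values are hypothetical placeholders, not taken from the original walkthrough.

```python
def create_table_script(table, column_family, rows):
    """Build HBase shell statements that create a table and insert rows.

    rows maps each row key to a {qualifier: value} dict.
    """
    lines = [f"create '{table}', '{column_family}'"]
    for row_key, cells in rows.items():
        for qualifier, value in cells.items():
            lines.append(
                f"put '{table}', '{row_key}', '{column_family}:{qualifier}', '{value}'"
            )
    return "\n".join(lines) + "\n"


# Hypothetical sample data, for illustration only.
sample_rows = {
    "device-001": {"temp": "21.5", "status": "ok"},
    "device-002": {"temp": "19.8", "status": "ok"},
}
print(create_table_script("sensor_readings", "d", sample_rows))
```

The printed statements can be pasted into an interactive `hbase shell` session on the source cluster, or piped into `hbase shell -n` to run non-interactively.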

Step 2: Note down the timestamp before stopping the HBase service from Ambari and taking the backup.

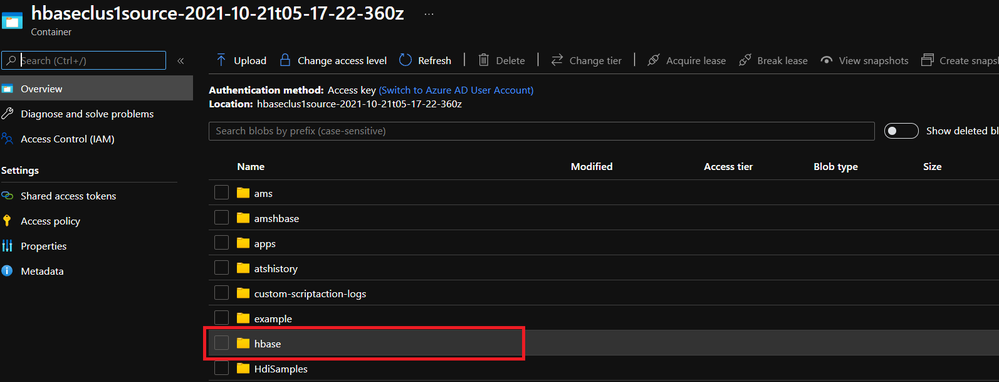

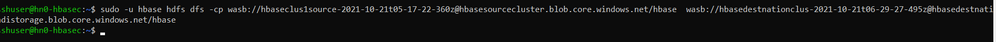
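
The timestamp noted in Step 2 is easiest to reuse later if it is recorded in epoch milliseconds, the unit HBase uses for cell timestamps by default. A minimal sketch, assuming the recorded value will later serve as the start time of an incremental export (the function names are illustrative):

```python
import time
from datetime import datetime, timezone


def now_epoch_millis():
    """Return the current time as milliseconds since the Unix epoch."""
    return int(time.time() * 1000)


def to_utc_string(epoch_millis):
    """Render an epoch-millisecond value as a readable UTC timestamp."""
    moment = datetime.fromtimestamp(epoch_millis / 1000, tz=timezone.utc)
    return moment.strftime("%Y-%m-%d %H:%M:%S UTC")


# Record this value just before stopping HBase on the source cluster.
backup_started_ms = now_epoch_millis()
print(backup_started_ms, to_utc_string(backup_started_ms))
```

Keeping both the raw number and the readable form makes it easier to cross-check the value against Ambari's service history later.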

Step 3: Copy the HBase Folder from source to Destination cluster

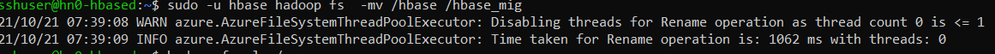

Step 4: Rename the HBase directory to hbase_mig in destination cluster
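
The rename in Step 4 keeps the destination cluster's original HBase directory as a fallback. A minimal sketch of the command, assuming it is run on a destination head node where `hdfs dfs` resolves paths against the cluster's default storage and HBase is not writing to the directory at the time:

```python
import shlex


def rename_hbase_root_cmd(current="/hbase", backup="/hbase_mig"):
    """Build the argv for moving the existing HBase directory aside."""
    return ["hdfs", "dfs", "-mv", current, backup]


# Print the command for review instead of running it directly.
print(shlex.join(rename_hbase_root_cmd()))
```

To execute it rather than print it, the returned list can be passed to `subprocess.run(cmd, check=True)`.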

Step 5: Copy the Source HBase Data folder to destination HBase Data Folder

Note: There are multiple options for copying the data, depending on the business requirement; see the link below. Here we have used the Hadoop copy command.

Data migration: On-premises Apache Hadoop to Azure HDInsight | Microsoft Docs
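
The copy in Step 5 could be scripted along the following lines. This sketch uses `hadoop distcp`, the same tool the article mentions for the incremental load, rather than reproducing the exact copy command used in the walkthrough. The source and destination URIs are hypothetical placeholders, and `-m` caps the number of parallel map tasks.

```python
import shlex


def distcp_cmd(source_uri, dest_uri, max_maps=None):
    """Build the argv for a DistCp copy from source_uri to dest_uri."""
    cmd = ["hadoop", "distcp"]
    if max_maps is not None:
        cmd += ["-m", str(max_maps)]  # upper bound on simultaneous map tasks
    return cmd + [source_uri, dest_uri]


# Hypothetical endpoints: replace with the real source and destination paths.
source = "hdfs://source-namenode:8020/hbase"
destination = "wasbs://mycontainer@myaccount.blob.core.windows.net/hbase"
print(shlex.join(distcp_cmd(source, destination, max_maps=20)))
```

Building the command as a list keeps URIs with special characters intact when the list is later handed to `subprocess.run`.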

Step 6: Once the data is copied, restart the HBase services from Ambari in the destination cluster.

Step 7: Verify the destination Cluster by listing the table and scanning the table.
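
A quick way to carry out this verification is a short HBase shell script that lists the tables, scans a few rows, and counts the rows for comparison with the source. The sketch below generates such a script; the table name is a hypothetical placeholder, and `count` performs a full scan, so it may be slow on large tables.

```python
def verify_script(table, sample_rows=5):
    """Build HBase shell statements that list tables and sample one table."""
    lines = [
        "list",
        f"scan '{table}', {{LIMIT => {sample_rows}}}",
        f"count '{table}'",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical table name, for illustration only.
print(verify_script("sensor_readings"))
```

Running the same script on the source and destination clusters and comparing the output gives a basic sanity check, though not a full data comparison.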

Copying the data can take time, and during that window your on-premises cluster may still receive new data. In that case, refer to the Incremental Load in HBase section below.

Option 2: Using Snapshot Based Approach

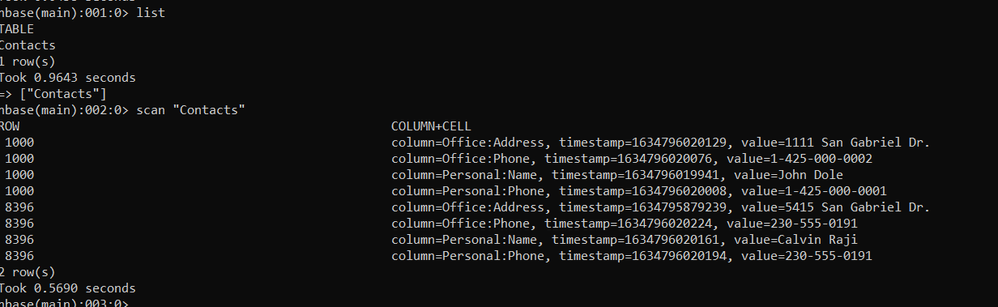

Step 1: Create Table in Source Cluster and insert some data

Step 2: Flush the table

Step 3: Disable the Table to Stop Writing

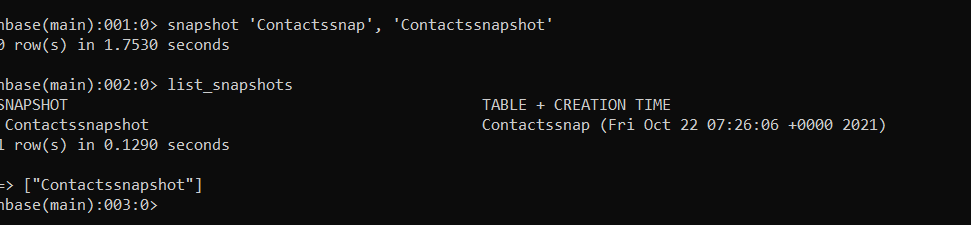

Step 4: Take a Snapshot

Step 5: Note down the Timestamp

Step 6: Enable Table

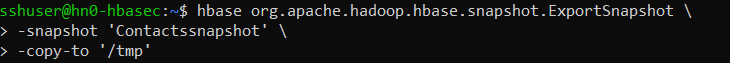
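
Steps 2 through 6 can be sketched as a generated HBase shell script plus a recorded timestamp. The table and snapshot names below are hypothetical, and the timestamp is taken on the machine running the script, which is assumed to have a clock in sync with the cluster.

```python
import time


def snapshot_script(table, snapshot_name):
    """Build HBase shell statements for flush, disable, snapshot, enable."""
    lines = [
        f"flush '{table}'",                       # Step 2: persist in-memory edits
        f"disable '{table}'",                     # Step 3: stop writes
        f"snapshot '{table}', '{snapshot_name}'", # Step 4: take the snapshot
        f"enable '{table}'",                      # Step 6: resume writes
    ]
    return "\n".join(lines) + "\n"


# Step 5: note the timestamp (epoch milliseconds) for the later incremental load.
snapshot_taken_ms = int(time.time() * 1000)
print(snapshot_script("sensor_readings", "sensor_readings_snap"))
print("snapshot timestamp (ms):", snapshot_taken_ms)
```

The recorded value is a natural start time for the export described in the incremental load section.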

Step 7: Export the snapshot to the /hbase folder on the destination HBase cluster's storage.

Step 7.1: If you are using Data Box to copy the data, export the snapshot to the same storage or to the Data Box instead. (Data migration: On-premises Apache Hadoop to Azure HDInsight | Microsoft Docs)

Step 7.2: Copy the snapshot from the Data Box to the /hbase directory of the destination cluster.

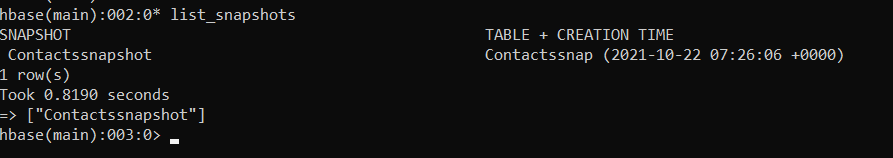

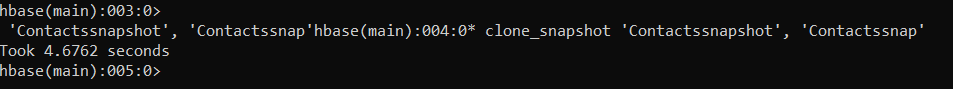
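
The export in Step 7 is typically done with HBase's `ExportSnapshot` tool, which runs a MapReduce job to copy the snapshot metadata and the files it references. A minimal sketch of the command, with a hypothetical snapshot name and destination URI:

```python
import shlex

EXPORT_SNAPSHOT_CLASS = "org.apache.hadoop.hbase.snapshot.ExportSnapshot"


def export_snapshot_cmd(snapshot_name, dest_hbase_root, mappers=16):
    """Build the argv that exports a snapshot to another HBase root directory."""
    return [
        "hbase", EXPORT_SNAPSHOT_CLASS,
        "-snapshot", snapshot_name,
        "-copy-to", dest_hbase_root,
        "-mappers", str(mappers),
    ]


# Hypothetical destination: the /hbase folder in the HDInsight cluster's storage.
dest = "wasbs://mycontainer@myaccount.blob.core.windows.net/hbase"
print(shlex.join(export_snapshot_cmd("sensor_readings_snap", dest)))
```

The mapper count here is an arbitrary starting point; a sensible value depends on the snapshot size and the available bandwidth.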

Step 8: Import the snapshot in the destination cluster and verify that the snapshot was exported.

Step 9: Clone the snapshot.

Step 10: Enable the table
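
Steps 8 through 10 map onto a few HBase shell statements on the destination cluster. The sketch below generates them with hypothetical names. The `enable` statement follows the steps as written; if the cloned table is already enabled, it can be dropped by passing `enable_after=False`.

```python
def clone_script(snapshot_name, table, enable_after=True):
    """Build HBase shell statements that list snapshots and clone one."""
    lines = [
        "list_snapshots",                             # Step 8: confirm it arrived
        f"clone_snapshot '{snapshot_name}', '{table}'",  # Step 9: create the table
    ]
    if enable_after:
        lines.append(f"enable '{table}'")             # Step 10: enable the table
    return "\n".join(lines) + "\n"


# Hypothetical names, for illustration only.
print(clone_script("sensor_readings_snap", "sensor_readings"))
```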

Incremental Load in HBase

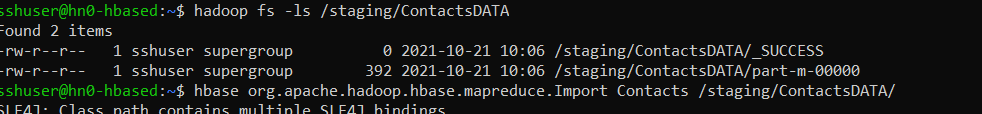

Step 1: Load some incremental data in the source cluster

Step 2: Note down the end time and run the export command, with the start and end time, to export to a local directory.
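
HBase's `Export` MapReduce tool accepts an optional number of versions followed by a start and end time in epoch milliseconds, which is what makes a time-bounded incremental export possible. A minimal sketch, with a hypothetical table name, output directory, and timestamps:

```python
import shlex

EXPORT_CLASS = "org.apache.hadoop.hbase.mapreduce.Export"


def incremental_export_cmd(table, output_dir, start_ms, end_ms, versions=1):
    """Build the argv that exports cells written between start_ms and end_ms."""
    if start_ms >= end_ms:
        raise ValueError("start_ms must be earlier than end_ms")
    return [
        "hbase", EXPORT_CLASS,
        table, output_dir,
        str(versions), str(start_ms), str(end_ms),
    ]


# Hypothetical values: start is the timestamp noted during the initial backup.
cmd = incremental_export_cmd(
    "sensor_readings", "/tmp/sensor_readings_incr", 1650000000000, 1650003600000
)
print(shlex.join(cmd))
```

The output directory must not already exist, since the tool writes its results as a new MapReduce output directory.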

Step 3: Use the DistCp command or Azure Data Factory (ADF) to copy the data.

Step 4: Run the import command in the destination cluster to import the data.
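
The counterpart to the export is HBase's `Import` MapReduce tool, which reads the exported files and writes them into an existing table. A minimal sketch, assuming the target table already exists on the destination with the same column families, and using hypothetical names:

```python
import shlex

IMPORT_CLASS = "org.apache.hadoop.hbase.mapreduce.Import"


def import_cmd(table, input_dir):
    """Build the argv that loads previously exported data into a table."""
    return ["hbase", IMPORT_CLASS, table, input_dir]


# Hypothetical path: the directory the exported files were copied to.
print(shlex.join(import_cmd("sensor_readings", "/tmp/sensor_readings_incr")))
```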

Step 5: Verify the data

Posted at https://sl.advdat.com/3q9mYZc