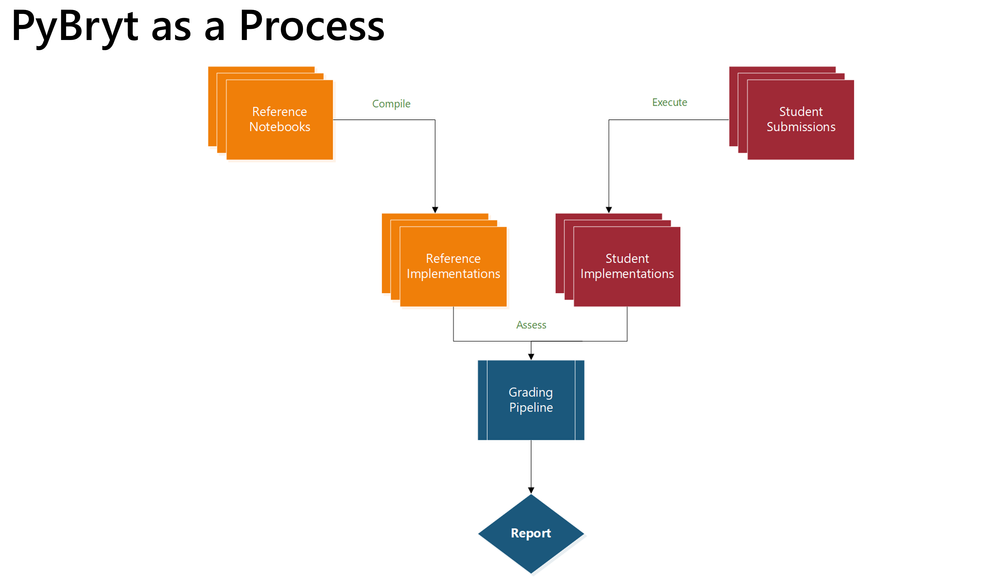

PyBryt is an open-source Python library that helps give students specific feedback while they work through an assignment, without requiring instructor intervention. PyBryt is an auto-assessment framework. It examines a student's solution to a problem and compares it against one or more reference implementations provided by the instructor. It uses that comparison to determine whether the solution is correct and to provide targeted messages about specific implementation details. In this way, students get the kind of helpful hints and pointers an instructor would give, automatically, rather than needing to meet with an instructor.
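The core idea of comparing a solution against instructor-provided references and attaching a targeted message to each check can be sketched in plain Python. This is a hypothetical illustration, not PyBryt's API. The `Annotation` class and `give_feedback` function are invented names, loosely modeled on the idea of success and failure messages attached to each expected value. The sketch assumes the values produced by the student's code have already been collected.

```python
from dataclasses import dataclass
from typing import Any, Iterable, List


@dataclass
class Annotation:
    """Hypothetical annotation: a value a correct solution should produce,
    plus the messages to show when it is (or is not) found."""
    expected: Any
    success_message: str
    failure_message: str


def give_feedback(observed: Iterable[Any], annotations: List[Annotation]) -> List[str]:
    """Return one targeted message per annotation."""
    seen = list(observed)  # a list rather than a set, so unhashable values such as lists work
    messages = []
    for ann in annotations:
        found = any(value == ann.expected for value in seen)
        messages.append(ann.success_message if found else ann.failure_message)
    return messages


# Reference for a hypothetical "sort the data, then take the median" exercise.
reference = [
    Annotation([1, 2, 3, 7, 9], "Found the sorted data.", "Did you sort the data first?"),
    Annotation(3, "Correct median.", "The median looks wrong; check your middle index."),
]

# Values a student's run produced: the raw data, the sorted data, and a wrong median.
student_values = [[9, 1, 7, 3, 2], [1, 2, 3, 7, 9], 7]
for message in give_feedback(student_values, reference):
    print(message)
# Found the sorted data.
# The median looks wrong; check your middle index.
```

Because each check carries its own message, the student learns which step went wrong (here, the median) rather than receiving a single pass/fail result.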

One of the most difficult problems instructors face is designing assignments and giving learners feedback that guides them toward the solution. This problem is exacerbated in computer science curricula, where assignments can generate cryptic error messages or give the appearance of success without throwing any errors. The nature of computer science assignments therefore redoubles the need for targeted instructor feedback and help with debugging implementations.

PyBryt's approach of comparing student work against reference implementations also has another important benefit: it allows instructors to create more open-ended assignments than traditional autograders would allow.

Traditional unit-test-based autograders require students to create rigidly structured objects or implement a specific API in their submissions. This limits students' ability to structure their solutions in their own ways. Often, one of the most time-consuming parts of grading with an autograder is helping students debug why their solution didn't pass the autograder tests even though the implementation is nominally correct.

PyBryt: auto-assessment and auto-grading for computational thinking

We continuously interact with computerized systems to achieve goals and perform tasks in our personal and professional lives. Therefore, everyone needs the ability to program such systems, which makes computational thinking an essential skill. This creates a challenge for the educational system: teaching these skills at scale and giving students opportunities to practice them. To address this challenge, we present a novel approach to providing formative feedback to students on programming assignments. Our approach uses dynamic evaluation to trace intermediate results generated by students' code and compares them to the reference implementation provided by their teachers. We have implemented this method as a Python library and demonstrate its use to give students relevant feedback on their work while allowing teachers to challenge their students' computational thinking skills. The paper is available on arXiv as [2112.02144](https://arxiv.org/abs/2112.02144): *PyBryt: auto-assessment and auto-grading for computational thinking*.
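One common way to do dynamic evaluation in Python is the standard library's `sys.settrace` hook. It calls a function on every executed line of traced code, which can snapshot the intermediate values a student's code produces. The sketch below is a toy illustration under that assumption, not PyBryt's actual tracer. `trace_values` and `student_running_total` are hypothetical names.

```python
import copy
import sys
from typing import Any, Callable, List


def trace_values(func: Callable, *args: Any) -> List[Any]:
    """Run func(*args) and return a snapshot of every local-variable value seen
    at each executed line, plus the return value. A toy illustration of
    dynamic evaluation, not PyBryt's tracer."""
    observed: List[Any] = []

    def tracer(frame, event, arg):
        if frame.f_code is not func.__code__:
            return None  # ignore frames other than the student's function
        if event in ("line", "return"):
            for value in list(frame.f_locals.values()):
                # deepcopy so later mutations (e.g. list.append) don't rewrite history
                observed.append(copy.deepcopy(value))
        if event == "return":
            observed.append(arg)  # for 'return' events, arg is the return value
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)  # restore any tracer (e.g. a debugger) that was active
    return observed


def student_running_total(xs):
    """A hypothetical student submission: running totals of a list."""
    total = 0
    partial = []
    for x in xs:
        total += x
        partial.append(total)
    return partial


values = trace_values(student_running_total, [1, 2, 3])
# A reference might expect the intermediate total 6 and the final list [1, 3, 6].
print(6 in values, [1, 3, 6] in values)  # prints: True True
```

Tracing values rather than calling a fixed API means the check does not depend on how the student named or structured things. It only depends on whether the expected values appear while the code runs.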

Because PyBryt can compare a student's implementation against multiple references, instructors can build assignments around libraries of reference implementations that are robust to the different ways students solve the same problem.
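Matching against several references can be illustrated with a small scoring rule. The rule is an assumption for illustration, not PyBryt's documented behavior. Each reference is reduced to a list of values its approach should produce. The submission is matched to whichever reference it covers best, and the sketch reports whether that reference is fully satisfied. `coverage` and `best_reference` are hypothetical helpers.

```python
from typing import Any, Dict, List, Tuple


def coverage(observed: List[Any], expected: List[Any]) -> Tuple[float, bool]:
    """Fraction of a reference's expected values found in the student's run,
    and whether all of them were found. expected must be non-empty."""
    hits = [any(value == e for value in observed) for e in expected]
    return sum(hits) / len(hits), all(hits)


def best_reference(observed: List[Any], references: Dict[str, List[Any]]) -> Tuple[str, bool]:
    """Return the name of the closest-matching reference and whether the
    submission satisfies it completely."""
    scores = {name: coverage(observed, expected) for name, expected in references.items()}
    best = max(scores, key=lambda name: scores[name][0])
    return best, scores[best][1]


# Two hypothetical references for "sum the squares of 1..4".
references = {
    "running sum": [1, 5, 14, 30],         # adds each square to a total in a loop
    "list then sum": [[1, 4, 9, 16], 30],  # builds all the squares, then sums them
}

print(best_reference([[1, 4, 9, 16], 30], references))  # ('list then sum', True)
print(best_reference([0, 1, 5, 14, 30], references))    # ('running sum', True)
```

Both students pass, each against the reference that matches their own strategy. A partially correct submission would still be paired with the closest approach, which is where its feedback is most useful.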

Microsoft Learn

Learn how to use PyBryt, an open-source auto-assessment tool, to create assignments with targeted feedback and generalizable grading.

Learning objectives

In this module you will:

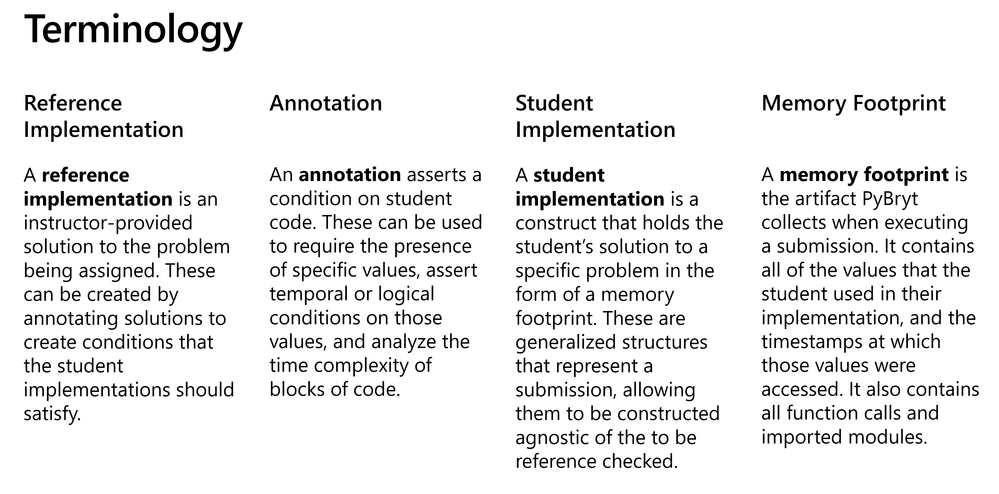

- Understand the concept of annotations in the context of reference implementations

- Combine annotations into reference implementations

- Explore how combining reference implementations can support different kinds of solutions

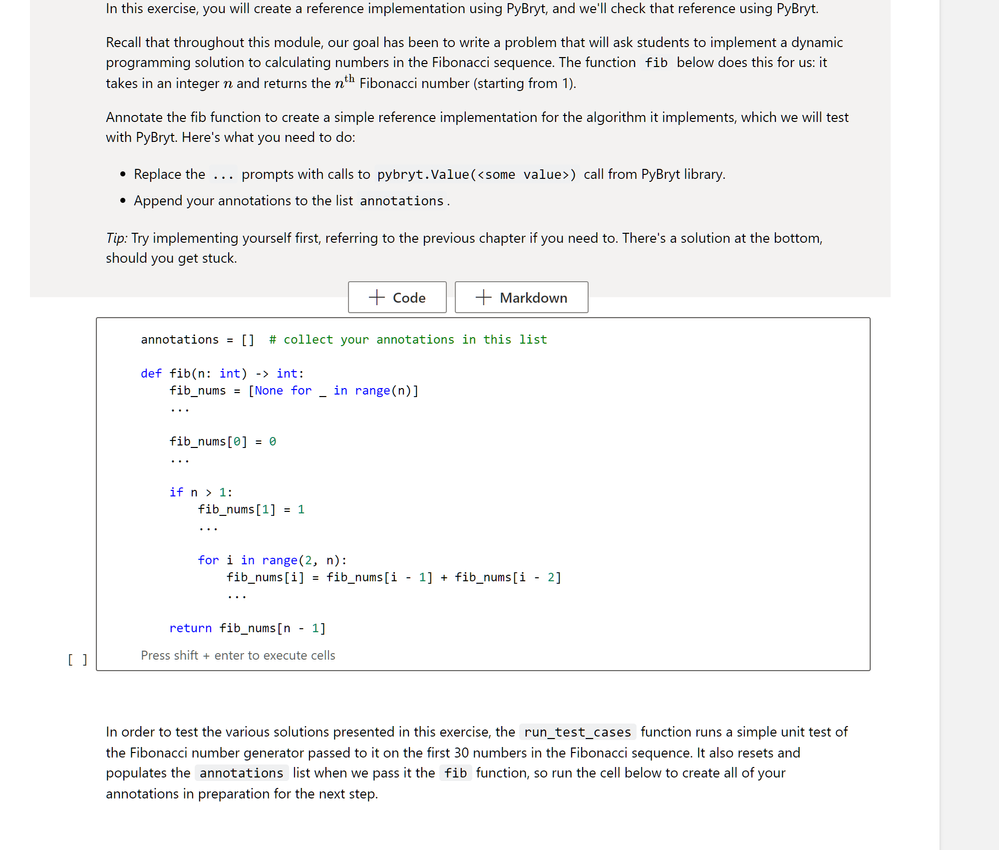

- Explore the process of creating a Fibonacci sequence assignment using PyBryt
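For a sense of what a Fibonacci assignment checks, here is a plain-Python sketch. It is not the Learn module's code and does not use PyBryt. The instructor's reference generates the values to look for, and any submission that produces them in the right order passes, whether it returns a list or yields from a generator. All function names are hypothetical. The in-order requirement is an illustrative choice.

```python
from typing import Any, Iterable, List


def reference_fib(n: int) -> List[int]:
    """Instructor's reference: the first n Fibonacci numbers, starting 0, 1."""
    seq = [0, 1]
    while len(seq) < n:
        seq.append(seq[-1] + seq[-2])
    return seq[:n]


def appears_in_order(expected: List[Any], observed: Iterable[Any]) -> bool:
    """True if every expected value occurs in observed, in the same order
    (other values may be interleaved between them)."""
    it = iter(observed)
    # each any() consumes the iterator up to its match, so order is enforced
    return all(any(value == e for value in it) for e in expected)


def student_list(n):
    """Hypothetical submission that builds and returns a list."""
    a, b = 0, 1
    out = []
    for _ in range(n):
        out.append(a)
        a, b = b, a + b
    return out


def student_generator(n):
    """Hypothetical submission with a different structure: a generator."""
    a, b = 0, 1
    for _ in range(n):
        yield a
        a, b = b, a + b


def student_starts_at_one(n):
    """Hypothetical submission with a common mistake: it starts at 1, 1."""
    out = [1, 1]
    while len(out) < n:
        out.append(out[-1] + out[-2])
    return out[:n]


expected = reference_fib(10)
for solution in (student_list, student_generator, student_starts_at_one):
    if appears_in_order(expected, solution(10)):
        print(f"{solution.__name__}: correct sequence")
    else:
        print(f"{solution.__name__}: does your sequence start with 0, 1?")
```

Neither correct submission had to match a prescribed function signature or return type. The failing one gets a message aimed at the specific mistake rather than a generic test failure.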

Educators and institutions can use the PyBryt library to integrate auto-assessment and reference implementations into hands-on labs and assessments:

- Educators do not have to enforce the structure of the solution;

- Learners practice the design process, code design, and solution implementation;

- Learners receive meaningful, pedagogical feedback;

- Analysis of complexity within learners' solutions;

- Plagiarism detection and support for reference solutions;

- Easy integration into existing organizational or institutional grading infrastructure.
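Complexity analysis of a learner's solution can be approximated empirically. The sketch below is a toy, and it is not how PyBryt implements complexity checks. It counts executed Python lines with `sys.settrace` as a hardware-independent stand-in for running time. It then runs the solution at several input sizes and fits the slope of log(steps) against log(n). A slope near 1 suggests linear growth and a slope near 2 quadratic. `count_steps` and `growth_exponent` are hypothetical names.

```python
import math
import sys
from typing import Callable, Sequence


def count_steps(func: Callable, *args) -> int:
    """Count 'line' events executed inside func and any Python code it calls,
    a rough proxy for running time (calls into C builtins are not counted)."""
    steps = 0

    def tracer(frame, event, arg):
        nonlocal steps
        if event == "line":
            steps += 1
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)
    return steps


def growth_exponent(func: Callable[[int], object], sizes: Sequence[int]) -> float:
    """Least-squares slope of log(steps) against log(n)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(count_steps(func, n)) for n in sizes]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def linear_solution(n):
    total = 0
    for i in range(n):
        total += i
    return total


def quadratic_solution(n):
    total = 0
    for i in range(n):
        for j in range(n):
            total += i * j
    return total


sizes = [50, 100, 200, 400]
print(round(growth_exponent(linear_solution, sizes), 2))     # close to 1
print(round(growth_exponent(quadratic_solution, sizes), 2))  # close to 2
```

Counting steps instead of timing with a clock keeps the estimate stable across machines and runs. Constant overheads are why the fitted slopes land near, rather than exactly on, whole numbers.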