We are excited to announce that Microsoft Defender for Key Vault has moved its back-end processing infrastructure to Azure Synapse Analytics. The benefits of deploying services on Azure Synapse Analytics include, but are not limited to, the following:

- It provides better compliance and security control.

- It has built-in support for .NET for Spark applications.

- It has built-in Azure Data Factory to orchestrate workflows, and its engine supports more than 90 data sources.

- It provides integration with Microsoft Power BI, Azure Machine Learning, Azure Cosmos DB, and Azure Data Explorer.

- It includes both a Spark engine and a SQL engine.

This article describes the changes we made to move our infrastructure to Azure Synapse Analytics.

Overview

Microsoft Defender for Key Vault is a good candidate to run on Azure Synapse Analytics because it is an end-to-end, ML-based big data analytics service. The migration covered many key areas, including streaming jobs, machine learning development, .NET Spark jobs, and more.

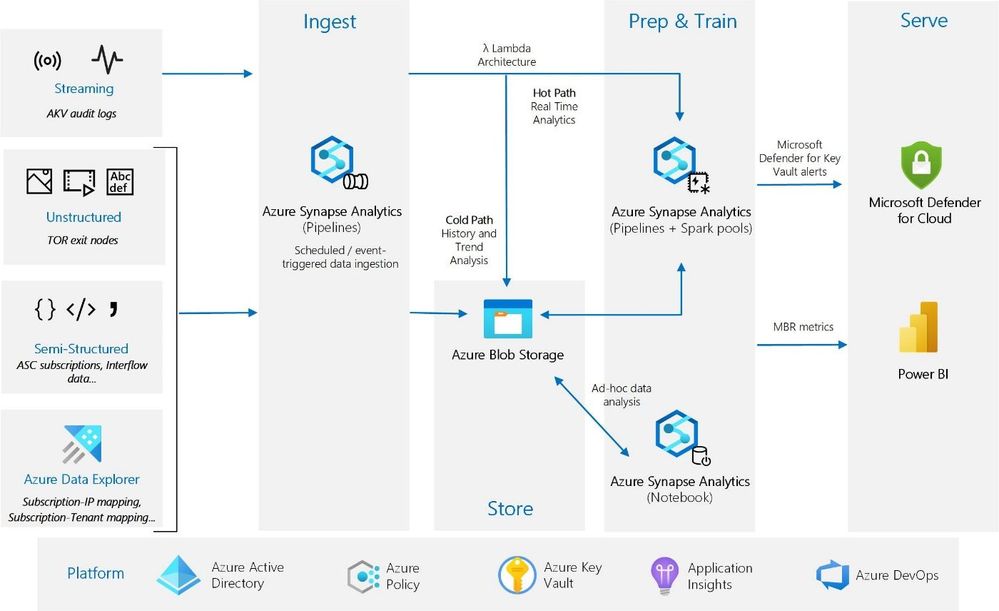

To build a modern data infrastructure, we made changes to each part of the service to arrive at the final architecture based on Azure Synapse Analytics, as illustrated in the architecture diagram:

- Use Azure Synapse Analytics Pipelines to create, schedule, and orchestrate data processing and transformation workflows.

- Use Azure Synapse Analytics Spark pools to ingest raw streaming data by running micro-batch processing jobs that implement the "Hot Path" of the Lambda architecture pattern and derive insights from the streaming data in transit.

- Use Azure Synapse Analytics Spark pools to perform historical and trend analysis on the "Cold Path" of the Lambda architecture pattern.

- Use Azure Synapse Analytics notebooks for ad-hoc jobs and exploratory data analysis.

- Raw structured, semi-structured, and unstructured data are ingested into Azure Data Lake Storage Gen2 from various sources. Azure Data Lake Storage Gen2 also stores resulting datasets such as aggregated data, features, models, and alerts.

- Use Azure services for collaboration, performance, reliability, governance, and security:

  - Azure DevOps offers continuous integration and continuous deployment (CI/CD) and other integrated version control features.

  - Azure Key Vault securely manages secrets, keys, and certificates.

  - Azure Application Insights collects and monitors custom application metrics, streaming query events, and application log messages.

  - Azure Active Directory (Azure AD) provides single sign-on (SSO) for Azure users.

These changes span source code, deployment, and interactions with other Azure services. Rather than lifting and shifting or refactoring the whole service, we chose re-platforming as our migration approach.

Key Learnings

In this section, I will go over the following key learnings from our migration in detail:

- Microsoft Spark Utilities

- Secrets Management

- Storage Management

- Package & Library Management

- Migrate Azure Data Factory V2 to Azure Synapse Analytics

- Support for .NET Spark Job

- Support for Streaming Job

- Support for Machine Learning

- Workspace Managed Identity

- Continuous Integration and Continuous Delivery

- Disaster Recovery

Microsoft Spark Utilities

Azure Synapse Analytics provides a built-in package called Microsoft Spark Utilities (MSSparkUtils) on top of open-source Spark. It comprises functions to manage secrets, file systems, notebooks, and more, and it is available in Azure Synapse Analytics notebooks and pipelines. We used these utilities to manage secrets and file systems after linking an Azure Key Vault and a storage account, respectively. Details on how to link these services are provided later in this article.

Secrets Utility:

- MSSparkUtils provides credentials utilities (mssparkutils.credentials) to manage secrets in Azure Key Vault. They can also be used to get access tokens for linked services and to update Azure Key Vault secrets.

File System Utility:

- Azure Synapse Analytics also provides file system utilities – mssparkutils.fs – for working with various file systems, including Azure Data Lake Storage Gen2 and Azure Blob Storage.

For details on the other utilities, see the official Azure Synapse Analytics documentation.
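A minimal sketch of both utilities as they might be called from a Synapse notebook follows. The Key Vault name, secret name, and linked service name are hypothetical placeholders, and using the `"Storage"` audience key for a storage token is our assumption. The helpers take the utilities object as a parameter so they can also be exercised outside Synapse with a stand-in object.

```python
# Sketch only: in a Synapse notebook, `mssparkutils` comes from `notebookutils`.
try:
    from notebookutils import mssparkutils  # available only inside Azure Synapse
except ImportError:
    mssparkutils = None  # outside Synapse, pass a stand-in object instead

# Hypothetical names; replace with your own Key Vault, secret, and linked service.
VAULT_NAME = "my-key-vault"
SECRET_NAME = "my-secret"
LINKED_SERVICE = "MyKeyVaultLinkedService"


def read_secret(utils, vault=VAULT_NAME, secret=SECRET_NAME,
                linked_service=LINKED_SERVICE):
    """Read a Key Vault secret through the linked service."""
    return utils.credentials.getSecret(vault, secret, linked_service)


def storage_token(utils):
    """Get an Azure AD token for Azure Storage (assumed audience key)."""
    return utils.credentials.getToken("Storage")


def list_paths(utils, directory):
    """Return the full paths of the entries directly under a directory."""
    # fs.ls returns FileInfo objects that expose a `path` attribute.
    return [info.path for info in utils.fs.ls(directory)]
```

In a notebook, `read_secret(mssparkutils)` would return the secret value, so avoid printing it (see the note on secret redaction below).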

Secrets Management

On Azure, we use Azure Key Vault to store and manage secrets. So far, you have learned how to use the secrets utility to access or store secrets in Azure Key Vault, but how do we configure access to Azure Key Vault in the first place?

On Azure Synapse Analytics, we can add an Azure Key Vault as a linked service in Azure Synapse Studio. We then grant secret access to the Azure Synapse workspace managed identity and to your Azure AD identity by adding an access policy on the Azure Key Vault. Note that, as of December 2021, Azure Synapse Analytics doesn't yet support secret redaction, which would prevent a secret from being accidentally printed to standard output or displayed during variable assignment.
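Until redaction is supported, one defensive option is to route log messages through a small masking helper. The `redact` and `safe_print` functions below are hypothetical helpers of our own, not part of MSSparkUtils, and they only protect output that actually passes through them.

```python
import re

REDACTED = "[REDACTED]"


def redact(text, secrets):
    """Replace every occurrence of each (non-empty) secret value in text."""
    # Longest values first, so a secret that contains another is masked whole.
    values = sorted((s for s in secrets if s), key=len, reverse=True)
    if not values:
        return text
    pattern = re.compile("|".join(re.escape(v) for v in values))
    return pattern.sub(REDACTED, text)


def safe_print(message, secrets):
    """Print a message only after masking known secret values."""
    print(redact(message, secrets))
```

For example, `safe_print(f"connecting with {conn_str}", [conn_str])` prints the message with the connection string masked.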

Storage Management

You have learned how to configure access to an Azure Key Vault. Now it is time to configure access to an Azure Data Lake Storage Gen2 account. All we need to do is assign the Storage Blob Data Contributor role on this account to the Azure Synapse workspace managed identity and to your Azure AD identity. You can then access data in this Azure Data Lake Storage Gen2 account from either Azure Synapse Analytics pipelines or notebooks via the following URL:

abfss://<container_name>@<storage_account_name>.dfs.core.windows.net/<path>
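The URL pattern can be wrapped in a small helper so that notebooks and jobs build paths consistently. This is a sketch; `abfss_url` and the example container and account names are hypothetical.

```python
def abfss_url(container, account, path=""):
    """Build an abfss:// URI for a path in an Azure Data Lake Storage Gen2 account."""
    if not container or not account:
        raise ValueError("container and account are required")
    base = f"abfss://{container}@{account}.dfs.core.windows.net"
    path = path.lstrip("/")  # avoid a double slash after the host name
    return f"{base}/{path}" if path else base + "/"
```

In a notebook, the result can be passed to a reader, for example `spark.read.parquet(abfss_url("raw", "mystorage", "events"))`.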

Package & Library Management

By default, Apache Spark in Azure Synapse Analytics has a full set of libraries for common data engineering, data preparation, machine learning, and data visualization tasks. When a Spark instance starts up, these libraries are included automatically.

Often, you may want to use custom or private wheel or jar files. You can upload these files as workspace packages and later add them to specific Apache Spark pools in Azure Synapse Analytics. Because these files are shared at the workspace level rather than the pool level, we must give the wheel file a different name every time we upload a new version.
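One way to guarantee a new file name per upload is to put a CI build number into the wheel's optional build tag, which the wheel file name layout (PEP 427) allows between the version and the Python tag. The helper below is a hypothetical sketch of that naming scheme; bumping the package version on every build works just as well.

```python
import re


def wheel_filename(distribution, version, build_number,
                   python_tag="py3", abi_tag="none", platform_tag="any"):
    """Return a wheel file name that carries a per-build tag (PEP 427 layout)."""
    if build_number < 0:
        raise ValueError("build_number must be a non-negative integer")
    # Runs of "-", "_" and "." in the distribution name become "_", lowercased.
    name = re.sub(r"[-_.]+", "_", distribution).lower()
    # A "-" inside the version would break the dash-separated layout.
    version = version.replace("-", "_")
    # The build tag must start with a digit, which an integer guarantees.
    build_tag = str(build_number)
    return f"{name}-{version}-{build_tag}-{python_tag}-{abi_tag}-{platform_tag}.whl"
```

If you build with the `wheel` package, its `bdist_wheel` command's `--build-number` option should produce the same kind of tag.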

In some cases, you may want to install external libraries on top of the base runtime. For Python, you can provide a requirements.txt or environment.yml file to specific Apache Spark pools to install packages from repositories such as PyPI and Conda-Forge. Other languages are not supported yet (as of December 2021); as a workaround, you can download the external libraries first and then upload them as workspace packages.

Each time an Apache Spark pool in Azure Synapse Analytics is updated with a new set of libraries, a system-reserved Spark job is initiated, which you can use to monitor the status of the library installation. When you update the libraries of an Apache Spark pool, the changes are picked up once the pool has restarted. If you have active jobs, they continue to run on the original version of the pool. You can force the changes to apply by selecting the Force new settings option, which ends all currently running and queued Spark applications for the selected pool. Once those applications have ended, the pool restarts and applies the new changes.

For every deployment, we create a new Apache Spark pool and deploy all changes to it to avoid this disruption. Once the existing jobs end, new jobs start running on the new pool with the new libraries.
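The new-pool-per-deployment approach can be sketched as two small helpers: one that names the pool for a release and one that decides which older pools are idle and safe to delete. Both are hypothetical. The naming rule in the code (letters and digits only, starting with a letter, at most 15 characters) is our understanding of the Spark pool name constraints and should be checked against current documentation; actually creating or deleting pools would happen separately, for example through ARM templates.

```python
import re

# Assumed Spark pool naming rule: starts with a letter, letters and digits only,
# at most 15 characters in total.
_POOL_NAME = re.compile(r"^[A-Za-z][A-Za-z0-9]{0,14}$")


def next_pool_name(prefix, release):
    """Name the fresh pool for a deployment, e.g. ('kvpool', 42) -> 'kvpool42'."""
    name = f"{prefix}{release}"
    if not _POOL_NAME.match(name):
        raise ValueError(f"invalid Spark pool name: {name!r}")
    return name


def pools_to_retire(pools, current, running_jobs):
    """Return older pools that no longer run any application.

    pools: names of pools created by earlier deployments (and the current one)
    current: the pool that new jobs are submitted to
    running_jobs: mapping of pool name -> number of running applications
    """
    return sorted(p for p in pools if p != current and running_jobs.get(p, 0) == 0)
```

New jobs always target the pool returned by `next_pool_name`, while a cleanup step periodically deletes whatever `pools_to_retire` reports, so no running application is ever interrupted.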

Migrate Azure Data Factory V2 to Azure Synapse Analytics

On Azure Synapse Analytics, data integration capabilities such as Azure Synapse Analytics pipelines and data flows are based on those of Azure Data Factory V2. The official documentation discusses the differences between Azure Synapse Analytics and Azure Data Factory V2. Azure provides a PowerShell script to migrate from Azure Data Factory V2 to Azure Synapse Analytics: https://github.com/Azure-Samples/Synapse/tree/main/Pipelines/ImportADFtoSynapse

Support for .NET Spark job

Azure Synapse Analytics provides an equivalent development experience for PySpark, Scala Spark, and .NET Spark. Only the following two steps are needed to run a .NET Spark job on Azure Synapse Analytics:

- Upload the ZIP file containing your .NET Spark application to an Azure Data Lake Storage Gen2 account linked to Azure Synapse Studio.

- Create an Apache Spark job definition, selecting .NET Spark as the language and entering the ZIP file path as the main definition file.

Tutorials for submitting Apache Spark jobs in each of these three languages from Azure Synapse Studio are available in the Azure Synapse Analytics documentation.
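Packaging the application for the upload step can be scripted. The sketch below assumes the ZIP should contain the contents of the `dotnet publish` output folder at its root, which is our reading of the .NET Spark tutorial; the function names and folder layout are hypothetical.

```python
import shutil
import zipfile
from pathlib import Path


def package_publish_folder(publish_dir, output_base):
    """Zip the contents of a `dotnet publish` folder; return the ZIP path.

    Entries are stored relative to the publish folder, so the application
    files sit at the root of the archive rather than under a subfolder.
    """
    publish = Path(publish_dir)
    if not publish.is_dir():
        raise FileNotFoundError(publish_dir)
    # root_dir makes archive entry names relative to the publish folder.
    return shutil.make_archive(str(output_base), "zip", root_dir=str(publish))


def archive_entries(zip_path):
    """List entry names in the archive, e.g. to check it before uploading."""
    with zipfile.ZipFile(zip_path) as zf:
        return zf.namelist()
```

Write the ZIP outside the publish folder so that the archive does not include itself.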

Support for Streaming job

Full support for perpetually running streaming jobs is on the roadmap for Azure Synapse Analytics. As of December 2021, Azure Synapse Analytics jobs are limited to 7 days. Our current workaround is to run micro-batch processing jobs that ingest the raw streaming data and to restart the job every 4 hours.
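The micro-batch workaround can be sketched with Spark Structured Streaming: start a query with a processing-time trigger and a checkpoint location, let it run for a bounded window, then stop it so that a scheduled run can start a fresh query that resumes from the checkpoint. The Parquet sink, the one-minute trigger, and the helper names are assumptions for illustration, not our production code.

```python
# Hypothetical interval matching the 4-hour restart described above.
RESTART_INTERVAL_SECONDS = 4 * 60 * 60


def start_micro_batch_query(stream_df, output_path, checkpoint_path,
                            trigger="1 minute"):
    """Start a micro-batch streaming query that writes Parquet files.

    The checkpoint lets the next run resume from the last committed offsets.
    """
    return (stream_df.writeStream
            .format("parquet")
            .option("checkpointLocation", checkpoint_path)
            .trigger(processingTime=trigger)
            .start(output_path))


def run_bounded(query, timeout_seconds=RESTART_INTERVAL_SECONDS):
    """Let the query run for up to timeout_seconds, then stop it.

    Returns True if the query terminated on its own before the timeout.
    A scheduler (for example, a pipeline schedule trigger) starts the next run.
    """
    terminated = query.awaitTermination(timeout_seconds)
    if not terminated:
        query.stop()
    return terminated
```

Because each run stays far below the 7-day job limit and always restarts from the same checkpoint, the restarts should not lose or reprocess committed micro-batches.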

Support for Machine Learning

As mentioned before, Apache Spark pools in Azure Synapse Analytics use runtimes to tie together essential component versions, and the Azure Synapse runtime includes a full set of libraries, such as Apache Spark MLlib, scikit-learn, and NumPy, for common data engineering, data preparation, machine learning, and data visualization tasks. Moreover, Azure Machine Learning is integrated with Azure Synapse notebooks, so users can easily use automated ML in Azure Synapse Analytics with passthrough Azure Active Directory authentication.

Workspace Managed Identity

A common challenge for developers is managing the secrets and credentials used to secure communication between the different components that make up a solution. Managed identities eliminate the need for developers to manage these credentials. System-assigned managed identities provide an identity for the service to use when connecting to resources that support Azure Active Directory (Azure AD) authentication by using Azure AD tokens.

The Azure Synapse workspace can authenticate to Azure Data Lake Storage Gen2 or Azure Key Vault via the workspace managed identity, which is created along with the workspace.

Continuous Integration and Continuous Delivery (CI/CD)

Microsoft Defender for Key Vault uses Azure Repos and Azure Pipelines to produce deployable artifacts for continuous integration and to release new versions for continuous deployment. Azure Resource Manager (ARM) templates and custom script extensions let us deploy our service to Azure automatically and in stages. ARM templates define the resources the service needs (for example, an Azure storage account) and specify deployment parameters that supply values for different environments. When an action isn't directly supported by ARM templates, we use custom script extensions to execute user-defined actions. The following table summarizes ARM template support for Azure Synapse Analytics (as of December 2021).

| Azure Synapse Analytics component | Deployment method |
| --- | --- |
| Compute Clusters | ARM template |
| Network Configuration | ARM template |
| Workspace Encryption Settings | ARM template |
| Workspace RBAC | Custom script extension |
| Azure Data Factory V2/Azure Synapse Studio | Custom script extension |
| Notebook | Custom script extension |
| Managed Identity | ARM template |
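The environment-specific deployment parameters mentioned above live in ARM parameter files. A minimal sketch that renders one such file per environment follows; the parameter names and values are hypothetical and must match the `parameters` section of your own template.

```python
import json

PARAMETERS_SCHEMA = ("https://schema.management.azure.com/schemas/"
                     "2019-04-01/deploymentParameters.json#")

# Hypothetical per-environment values; names must match your ARM template.
ENVIRONMENTS = {
    "dev": {"workspaceName": "kvsynapsedev", "sparkPoolNodeCount": 3},
    "prod": {"workspaceName": "kvsynapseprod", "sparkPoolNodeCount": 10},
}


def parameters_file(values):
    """Render an ARM deployment parameters file for one environment."""
    document = {
        "$schema": PARAMETERS_SCHEMA,
        "contentVersion": "1.0.0.0",
        # Each parameter is wrapped in a {"value": ...} object.
        "parameters": {name: {"value": value} for name, value in values.items()},
    }
    return json.dumps(document, indent=2)
```

Each rendered file can then be passed to a deployment, for example `az deployment group create --resource-group <group> --template-file <template> --parameters @<file>`, so the same template serves every environment.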

The Azure SDK for .NET provides an Azure Synapse Analytics development client library for programmatically managing artifacts, with methods to create, update, list, and delete pipelines, datasets, data flows, notebooks, Spark job definitions, SQL scripts, linked services, and triggers.

Disaster Recovery

Business Continuity and Disaster Recovery (BCDR) is the strategy that determines how applications, workloads, and data remain available during planned and unplanned downtime. As of December 2021, Azure Synapse Analytics supports disaster recovery only for dedicated SQL pools, not yet for Apache Spark pools or Azure Synapse Studio. For dedicated SQL pools, Azure Synapse Analytics uses data warehouse snapshots, which create restore points that you can use to recover or copy your data warehouse to a previous state. In the event of a disaster, we can create a new Azure Synapse Analytics environment and then deploy our service to it using Azure Pipelines.

Conclusion

In this article, we covered the architecture changes we made to deploy Microsoft Defender for Key Vault on Azure Synapse Analytics. We also took a deep dive into the migration and shared our key learnings from it.

References

https://docs.microsoft.com/en-us/azure/synapse-analytics/

Posted at https://sl.advdat.com/3BMhebR