Today Microsoft Sentinel provides different capabilities and tools that enable security analysts and data scientists to generate security insights via big data analytics. At Microsoft Ignite 2021, we announced the Public Preview of Microsoft Sentinel Notebooks integration with Azure Synapse. In addition to the general Notebooks capabilities in threat hunting and investigation, the integration provides a new SecOps pipeline with extra features for big data analysis, built-in data lake access, and the Apache Spark distributed processing engine.

To work with Microsoft Sentinel datasets, you will need a continuous data pipeline to export logs from your Sentinel workspace to a data lake or blob storage. We are happy to announce the general availability of the data export feature that enables this continuous data pipeline! If you’ve collected custom logs that you want to export and use in the analysis, Log Analytics now also supports export of the new generation of custom logs (also known as CLv2) in addition to the currently supported tables.

How to configure a continuous data export pipeline

There are several ways to configure a continuous data export pipeline. Here we highlight two methods commonly used by our Microsoft Sentinel Notebooks users. Check out the documentation for a more complete list.

- Via Log Analytics workspace UI

Follow the instructions to create a new export rule via the Log Analytics workspace UI.

- Via pre-built Microsoft Sentinel notebook

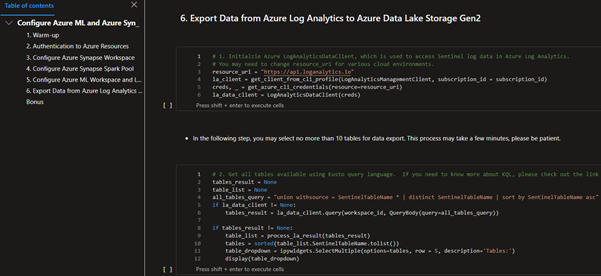

Additionally, we provide a pre-built notebook that enables a one-time configuration for the Azure Synapse integration. The notebook template can be cloned and launched directly from Microsoft Sentinel to an Azure ML environment. The notebook provides a script that allows you to create an export rule. Select the Microsoft Sentinel tables you want to export and specify an Azure Data Lake destination.
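To give a rough idea of what creating an export rule involves, here is a minimal Python sketch that only builds the request for a rule; it does not send anything and it is not the script shipped in the notebook. The endpoint path, the `api-version` value, and the body fields are assumptions based on the Azure Resource Manager data export API and should be checked against the current documentation. All IDs and names below are hypothetical placeholders.

```python
import json

# Assumption: ARM endpoint and api-version for Log Analytics data export rules.
# Verify both against the current REST API reference before use.
ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2020-08-01"


def build_export_rule_request(subscription_id, resource_group, workspace_name,
                              rule_name, table_names, destination_resource_id):
    """Return (url, body) for a PUT request that would create an export rule."""
    if not table_names:
        raise ValueError("at least one table name is required")
    url = (
        f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.OperationalInsights/workspaces/{workspace_name}"
        f"/dataExports/{rule_name}?api-version={API_VERSION}"
    )
    body = {
        "properties": {
            # Resource ID of the storage account backing the data lake.
            "destination": {"resourceId": destination_resource_id},
            # Microsoft Sentinel tables to export continuously.
            "tableNames": list(table_names),
            "enable": True,
        }
    }
    return url, body


# Hypothetical placeholder values, for illustration only.
url, body = build_export_rule_request(
    subscription_id="00000000-0000-0000-0000-000000000000",
    resource_group="my-resource-group",
    workspace_name="my-sentinel-workspace",
    rule_name="export-to-datalake",
    table_names=["SecurityEvent", "Heartbeat"],
    destination_resource_id=(
        "/subscriptions/00000000-0000-0000-0000-000000000000"
        "/resourceGroups/my-resource-group"
        "/providers/Microsoft.Storage/storageAccounts/mydatalake"
    ),
)
print(url)
print(json.dumps(body, indent=2))
```

Sending the request would additionally require an authenticated HTTP client with permission to modify the workspace, which is left out here on purpose.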

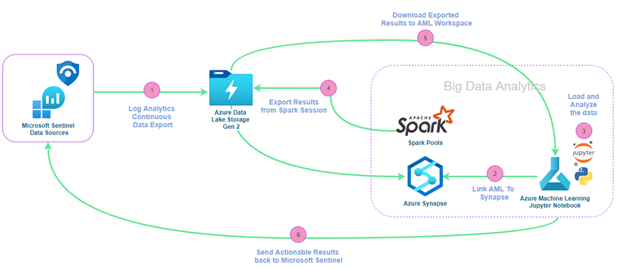

In general, the data pipeline and analysis architecture of our notebooks for the Synapse integration looks like the following image. For more details, check out our sample notebook that detects potential network beaconing on Synapse Spark pools.
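For readers unfamiliar with beaconing, the idea can be sketched in a few lines of plain Python: a source that contacts the same destination at nearly constant intervals is a candidate. This is an illustrative simplification, not the logic of the sample notebook, and it runs on an in-memory list rather than a Spark pool. The function name, thresholds, and sample events are all hypothetical.

```python
from collections import defaultdict
from statistics import median


def find_beacon_candidates(events, min_events=5, tolerance_s=2.0, min_ratio=0.8):
    """Flag (source, destination) pairs with near-constant connection intervals.

    events: iterable of (timestamp_seconds, source_ip, dest_ip) tuples.
    Returns a dict mapping each flagged pair to its typical interval in seconds.
    """
    by_pair = defaultdict(list)
    for ts, src, dst in events:
        by_pair[(src, dst)].append(ts)

    candidates = {}
    for pair, stamps in by_pair.items():
        if len(stamps) < min_events:
            continue  # too few connections to judge regularity
        stamps.sort()
        deltas = [b - a for a, b in zip(stamps, stamps[1:])]
        typical = median(deltas)
        # Count intervals that stay within the tolerance of the typical one.
        regular = sum(1 for d in deltas if abs(d - typical) <= tolerance_s)
        if regular / len(deltas) >= min_ratio:
            candidates[pair] = typical
    return candidates


# Synthetic sample data: one host connecting every 60 seconds, one irregular.
sample_events = [(t, "10.0.0.5", "203.0.113.7") for t in range(0, 360, 60)]
sample_events += [(t, "10.0.0.5", "198.51.100.9") for t in (0, 5, 100, 130, 400, 410)]

print(find_beacon_candidates(sample_events))
```

On large exported tables, the same grouping and interval computation would normally be expressed with distributed operations so that it can scale across the pool.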

Try out the data export with the Notebooks integration with Azure Synapse to create your own big data analytics and let us know if you have any feedback.

Posted at https://sl.advdat.com/3vsxb5M