Why migrate on-premises big data workloads to Azure?

There are many reasons why customers consider migrating their existing on-premises big data workloads to Azure.

- Cost of ownership - Running a cluster of computers in an on-premises data center requires considerable administration effort and significant capital expenditure on hardware. The day-to-day running costs of maintaining a cool and stable environment for high-powered computing resources can also be a significant factor.

- Uncertainty in on-premises ecosystem - In the past few years, Hadoop on Cloud has significantly helped shape the on-premises Hadoop ecosystem. Mergers, bankruptcies and license expirations have left customers deeply uncertain about the viability of continuing with their on-premises offerings.

- Performance and autoscaling - An on-premises system is a static, one-size-fits-all solution. Scaling is a time-consuming, manual operation that involves a complex array of tasks. It's not easy to add resources to a live on-premises cluster.

- Better VM types - Your on-premises solution might be restricted by the level of hardware available to support the virtual machines necessary to host evolving workloads.

- HA/DR - High availability and disaster recovery are a major headache for many on-premises systems, requiring built-in redundancy and well-rehearsed plans for restoring full functionality.

- Compliance - In a large-scale commercial system, you may be legally liable for maintaining the appropriate records and audit trails, and ensuring security.

- End of support - Your existing system might be running on end-of-life software that is no longer supported. To ensure stability, you will need to transition to a newer release.

What is Enabling Hadoop Migrations on Azure (EHMA)?

EHMA aims to enable quicker, easier and more efficient Hadoop migrations, with the goal of making Azure the preferred cloud for migrating Hadoop workloads.

Many customers with on-premises Hadoop face extensive technical blockers, whether in designing their cloud architectures or in migrating to them. Assessing the on-premises Hadoop infrastructure with pre-built scripts and a questionnaire leads to a better-planned migration and clears roadblocks in the early phases.

- Prescriptive architecture as a starting point with room to customise - End-state architectures are individually curated for each Hadoop stack component on Azure, for both IaaS and PaaS.

- Documented prescriptive guides - Give the field team a guide for driving Hadoop migrations and deploying base architectures on Azure, which speeds up the migration process.

- Deployment templates - Architecture deployments on Azure are supported by Bicep templates, which can launch configured, ready-to-use infrastructure on Azure for IaaS and PaaS with all dependent Azure services included.

- Comprehensive guidance - The guidance covers specific recommendations and considerations you can follow to help move your existing Hadoop infrastructure to Azure.

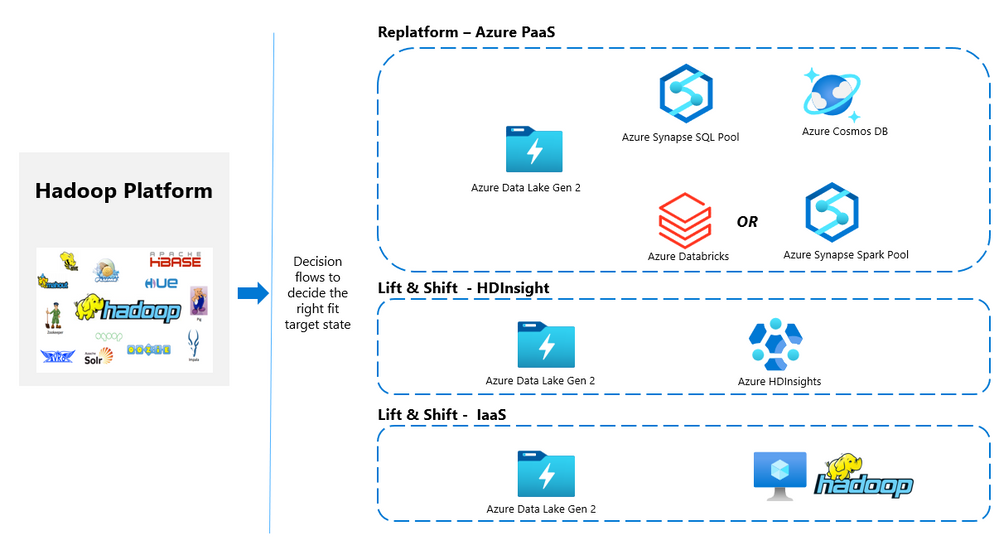

- Decision flows - To choose the best landing target, comprehensive decision trees help you navigate to the best available option for your requirements.

Hadoop components migration approach

EHMA focuses on specific guidance and considerations you can follow to help move your existing platform or infrastructure, whether on-premises or in another cloud, to Azure. EHMA covers the following Hadoop ecosystem components:

| Component | Description | Decision Flow/Flowcharts |
|---|---|---|
| Apache HDFS | Distributed file system | Planning the data migration, Pre-checks prior to data migration |
| Apache HBase | Column-oriented table service | Choosing landing target for Apache HBase, Choosing storage for Apache HBase on Azure |
| Apache Hive | Data warehouse infrastructure | Choosing landing target for Hive, Selecting target DB for Hive metadata |
| Apache Spark | Data processing framework | Choosing landing target for Apache Spark on Azure |
| Apache Ranger | Framework to monitor and manage data security | |
| Apache Sentry | Framework to monitor and manage data security | Choosing landing targets for Apache Sentry on Azure |
| Apache MapReduce | Distributed computation framework | |
| Apache Zookeeper | Distributed coordination service | |
| Apache YARN | Resource manager for Hadoop ecosystem | |
| Apache Storm | Distributed real-time computing system | Choosing landing targets for Apache Storm on Azure |
| Apache Sqoop | Command-line interface tool for transferring data between Apache Hadoop clusters and relational databases | Choosing landing targets for Apache Sqoop on Azure |
| Apache Kafka | Highly scalable, fault-tolerant distributed messaging system | Choosing landing targets for Apache Kafka on Azure |
| Apache Atlas | Open source framework for data governance and metadata management | |

End State Reference Architecture

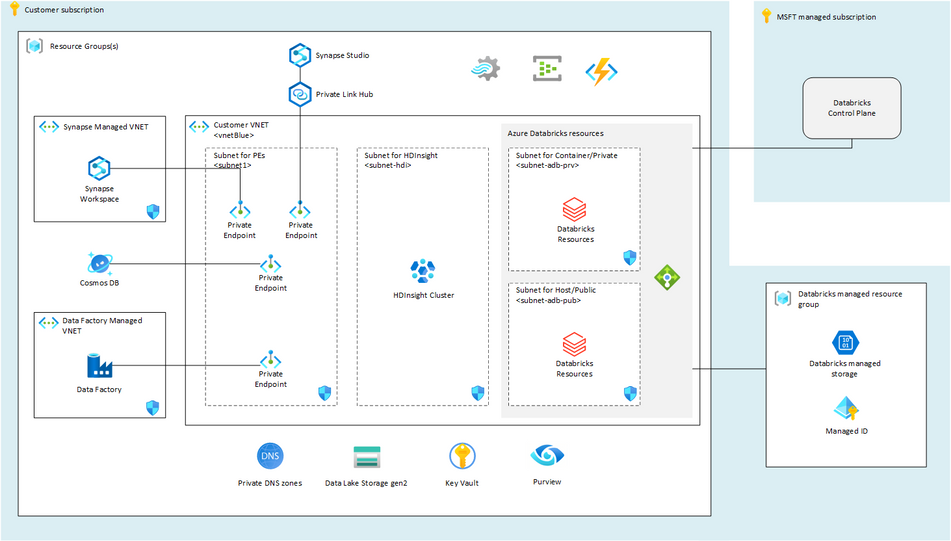

One of the challenges in migrating workloads from on-premises Hadoop to Azure is getting a deployment that aligns with both the desired end-state architecture and the application.

The Bicep deployment template (Reference Architecture Deployment) aims to significantly reduce the effort of deploying the PaaS services on Azure and getting a production-ready architecture up and running.

The diagram above depicts the end-state architecture for big data workloads on Azure PaaS, listing all the components deployed as part of the Bicep template deployment. Bicep also offers the advantage of deploying only the modules you prefer for a customised architecture.
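As a rough illustration of deploying only selected modules, the sketch below uses Bicep's conditional deployment syntax to toggle modules with boolean parameters. The parameter names (`deployStorage`, `deploySpark`) and module paths (`./modules/storage.bicep`, `./modules/spark.bicep`) are hypothetical and are not taken from the EHMA templates, which may be organised differently.

```bicep
// Minimal sketch only: parameter names and module paths are assumptions,
// not the layout of the actual EHMA reference templates.

@description('Azure region for all resources.')
param location string = resourceGroup().location

@description('Set to false to skip the (hypothetical) storage module.')
param deployStorage bool = true

@description('Set to false to skip the (hypothetical) Spark module.')
param deploySpark bool = true

// Each module is deployed only when its flag is true.
module storage './modules/storage.bicep' = if (deployStorage) {
  name: 'storageDeployment'
  params: {
    location: location
  }
}

module spark './modules/spark.bicep' = if (deploySpark) {
  name: 'sparkDeployment'
  params: {
    location: location
  }
}
```

Under these assumptions, a file saved as `main.bicep` could be deployed without the Spark module by passing `--parameters deploySpark=false` to `az deployment group create --resource-group <resource-group> --template-file main.bicep`.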

Posted at https://sl.advdat.com/3sQjGee