Continuing the topic of data space configuration discussed in how-to discover database files space configurations on Azure SQL Managed Instance, in this post we shall focus on autogrowth aspect of the database data & log files, how to measure it and how to manage it.

If you are interested in other posts on how-to discover different aspects of SQL MI - please visit the http://aka.ms/sqlmi-howto, which serves as a placeholder for the series.

The importance of managing auto-growth for data and log files can not be overstated, if configured incorrectly it can make your Azure SQL Managed Instance perform incredibly slow, eventually bringing the system to a state which might appear a standstill to the customer. Countless posts have been written on how to configure and size the auto-growth correctly for SQL Server, but the very same aspect seems to be less understood and much less applied for Azure SQL Managed Instance.

In this post we shall dive into some of the aspects which govern the autogrowth impact on the database files, helping customers to understand why disk performance is configured it is, why customers are in control and how they can achieve the best disk performance with Azure SQL Managed Instance.

First, we need to start with space reservation and allocation for Azure SQL Managed Instance. Because there is a price associated with the disk space reservation and because the detailed allocation of the space is entirely up to the concrete customer wants and needs – Microsoft cannot take those decisions for the customer. Secondly, for the General Purpose service tier, the precise size of any database file directly affect its performance, the decision to change and apply different sizes to the database files lies in the hands of the customer.

Talking about database file management will require us to touch on the subject of Instant File Initialization, also known as IFI and its status of support on Azure SQL Managed Instance.

IFI – Instant File Initialization

You might be surprised with this revelation, but out of the box, Azure SQL Managed Instance does not support Instant File Initialization. IFI is an amazing feature, which allows speeding up growth for your SQL Server database files by avoiding zeroing the files (filling with zeros) when you perform different operations such as creation of the database, restore of a database or a filegroup or by increasing the size of an existing file (including autogrow operations).

By default and recommended feature of Azure SQL Managed Instance is to use Transparent Data Encryption (TDE), which disables the advantage of the Instant File Initialization and no matter if you are using General Purpose or Business Critical service tiers, your data file operations with TDE will require writing zeroes onto the allocated test, thus removing all potential advantages of Instant File Initialization.

Additionally, [tempdb] system database is encrypted the moment any other database on the same SQL Server instance is encrypted by using TDE. This means that because other system databases, such as master and msdb are encrypted out of the box, [tempdb] right from the start is encrypted.

We highly recommend customers not to remove TDE from their user databases and if they need to exercise more precise control over the encryption, they can always use BYOK (Bring Your Own Key) and integrate it with Azure Key Vault (AKV).

Sizing and Autogrow for your database files

Selecting the right initial size will depend on several factors. Ideally, the growth should be managed by the administrator to avoid painful delays during the important production times. Customer can always resize their database files at the convenient moment, or even better program those occurrences during the maintenance times of their databases, with the help of SQL Agent, for example.

Choosing the right settings for the autogrow has a lot different considerations and for the purpose of keeping them all in one place, please consult Considerations for Autogrow in our documentation. One key thing that definitely affects failover times is the number of Virtual Log Files (VLFs) that will result from the uncontrolled and frequent growth of the log file.

Performance Test

Let’s do a rather simple performance test against 2 similar databases and let’s measure the precise impact of the time that the storage engine is spending on autogrowing database files.

In the script below I will create 2 databases on the Azure SQL Managed Instance – [DefaultSpeedDB] the database with all the defaults and [OptimizedDB] the database that we shall resize to have 5GB for both data and log files, setting the autogrowth for the data file to 2GB and the autogrowth for the log file to 1 GB. We are running this test on a General Purpose service tier with 4 CPU vCore Azure SQL MI and 1 TB of the reserved space. Given that both their files are under 127 GB, there should not be any performance advantages for neither of them.

USE master;

CREATE DATABASE DefaultSpeedDB;

CREATE DATABASE OptimizedDB;

ALTER DATABASE [OptimizedDB] MODIFY FILE ( NAME = N'data_0', SIZE = 5GB , FILEGROWTH = 2GB );

ALTER DATABASE [OptimizedDB] MODIFY FILE ( NAME = N'log', SIZE = 5GB , FILEGROWTH = 1GB );

To verify the current settings, let’s execute the following query against the sys.master_files system catalog view:

SELECT DB_NAME(database_id) as DbName,

type,

type_desc,

name,

CAST( size*8./1024/1024 as DECIMAL(9,2)) AS SizeInGB,

CASE WHEN mf.is_percent_growth = 1 THEN growth ELSE 0 END as PercentGrowth,

CASE WHEN growth = 0 OR mf.is_percent_growth = 1 THEN NULL ELSE CAST(growth * 8. / 1024 / 1024 AS decimal(9,3)) END as FileGrowthGB

FROM sys.master_files mf

WHERE DB_NAME(database_id) IN ('DefaultSpeedDB','OptimizedDB') AND file_id < 65530;

The results are shown below, and you can observe that we just set the [OptimizedDB] database files to 5 GB and the [DefaultSpeedDB] database is keeping the default values from an unchanged [model] database with the default growth of 16 MB:

The test itself is simple, we shall load 10 million rows of very similar values into a test table, representing a little bit over 5 GB of occupied space, thus making both databases activating their autogrowth configurations, while comparing the time spent on growing data and log files.

USE DefaultSpeedDB;

DROP TABLE IF EXISTS dbo.TestSource;

CREATE TABLE dbo.TestSource(

id int not null,

name char (200),

lastname char(200),

logDate datetime );

-- Loading Rows

set nocount on

declare @i as int;

set @i = 1;

begin tran

while @i <= 10000000

begin

insert into dbo.TestSource

values (@i, 'First Name', 'LastName', GetDate());

set @i = @i + 1;

end;

commit

USE [OptimizedDB];

DROP TABLE IF EXISTS dbo.TestSource;

CREATE TABLE dbo.TestSource(

id int not null,

name char (200),

lastname char(200),

logDate datetime );

-- Loading Rows

set nocount on

declare @i as int;

set @i = 1;

begin tran

while @i <= 10000000

begin

insert into dbo.TestSource

values (@i, 'First Name', 'LastName', GetDate());

set @i = @i + 1;

end;

commit

We shall not be focusing on the overall performance, but rather focusing on the autogrowth events. Those events and their respective duration can be found in the default trace, and for this purpose let’s find those events by listing and counting them.

Notice that you will need to run the respective workload for each of the test databases individually within their scope by selecting and running this script against them. In the script below we are considering just the events that took in the previous 30 minutes, so be sure to run them right after the execution of the above data insertion script.

DECLARE @filename NVARCHAR(1000)

SELECT @filename = CAST(value AS NVARCHAR(1000))

FROM sys.fn_trace_getinfo(DEFAULT)

WHERE traceid = 1 AND property = 2

-- Split filename into parts in order to get rid of the rollover number

DECLARE @bc INT,

@ec INT,

@bfn VARCHAR(1000),

@efn VARCHAR(10)

SET @filename = REVERSE(@filename)

SET @bc = CHARINDEX('.',@filename)

SET @ec = CHARINDEX('_',@filename)+1

SET @efn = REVERSE(SUBSTRING(@filename,1,@bc))

SET @bfn = REVERSE(SUBSTRING(@filename,@ec,LEN(@filename)))

-- Set filename without rollover number

SET @filename = @bfn + @efn

SELECT ftg.StartTime,

te.name as EventName,

DB_NAME(ftg.databaseid) as DatabaseName,

ftg.[Filename] as FileName,

(ftg.IntegerData*8)/1024.0 as GrowthMB,

(ftg.duration)/1000000.0 as Duration_Secs

FROM fn_trace_gettable(@filename, DEFAULT) AS ftg

INNER JOIN sys.trace_events AS te

ON ftg.EventClass = te.trace_event_id

WHERE (ftg.EventClass = 92 OR ftg.EventClass = 93)

AND DatabaseID = DB_ID()

AND ftg.StartTime > DATEADD(MINUTE, -10, GETDATE())

SELECT te.name as EventName,

DB_NAME(ftg.databaseid) as DatabaseName,

SUM( (ftg.IntegerData*8)/1024.0 ) as GrowthMB,

SUM( (ftg.duration)/1000000.0 ) as Duration_Secs

FROM fn_trace_gettable(@filename, DEFAULT) AS ftg

INNER JOIN sys.trace_events AS te

ON ftg.EventClass = te.trace_event_id

WHERE (ftg.EventClass = 92 OR ftg.EventClass = 93)

AND DatabaseID = DB_ID()

AND ftg.StartTime > DATEADD(MINUTE, -30, GETDATE())

GROUP BY te.name,

DB_NAME(ftg.databaseid);

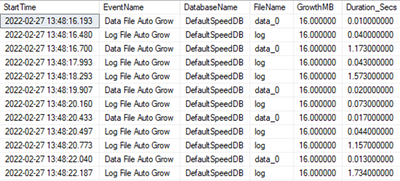

On the image below this text you can see some of the output for the [DefaultSpeedDB] database with over 500 distinct events – showing that both data and log would grow by the default 16 MB with different overall performance:

The output of the second query will show total growth – 5.2GB for the data file and almost 4 GB for the log file, representing 7 and 27 seconds respectively. Just for the reference, the overall performance of the query is around 7 minutes, meaning that whooping 8% of the execution time was spent on something that should have been controlled right from the beginning.

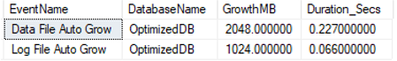

Running the same workload analysis against the [OptimizedDB] database produces quite different results with just 1 autogrow events for each data and log files and the overall duration is around 0.3 seconds instead of the 35+ seconds that we have observed in the non-optimized database case.

Below you can see the difference between the time spent on adjusting the database file sizes, where Optimized DB are almost invisible, and as a matter of a fact – increasing the initial size to 6 GB would address even that tiny time spent preventing workload to advance.

In this post you have learned some of the reasons behind configuring your database file sizes and seen how big a difference between a relatively well configured database and a default configured database can perform for some workloads. Even though Azure SQL Managed Instance is delivered to customers with capabilities of a Platform as a Service (PaaS), the responsibility & the power of decision for managing user database files is given to customer – and it should not be wasted.

Posted at https://sl.advdat.com/3Kc0v4qhttps://sl.advdat.com/3Kc0v4q