Many manufacturing industries, particularly those that make precision products, are constantly looking for ways to improve both manufacturing efficiency and worker safety. With the increased use of automation in these industries, site access is often limited to specially equipped and trained crews, which significantly increases operational expenses. Site monitoring that provides additional information on failures, impending or otherwise, can help reduce:

- The number of incidents needing maintenance, leading to better uptime

- Downtime, because the necessary tools, procedures, and spare parts can be identified up front from the monitoring information

- Time spent by work crews at the site, reducing risk and improving worker safety

Kyndryl participated in the Azure Percept Bootcamp, where we built a solution that uses Azure Percept to monitor defective components. The solution watches the trays or containers into which defective components are discarded, either by the assembly process or by the automated tools themselves, and sends the types of components detected and their counts to analytical tools and dashboards. Azure Percept, with its ability to run AI models at the edge, the tooling available to train the models, and the integration with powerful backend analytical tools, enabled the rapid build of cost-effective and flexible monitoring capabilities.

This blog explains our solution and how Azure Percept can be used in a manufacturing scenario: Monitoring of Defective Components.

Overview of the Solution

The solution we developed using Azure Percept is called Monitoring of Defective Components. A brief description of the solution can be found in the following YouTube video: https://youtu.be/x8-JO7mGnhY.

Below is a diagram for the architecture of our Manufacturing Solution: Monitoring of Defective Components with Azure Percept:

We leverage the following Azure components in our solution:

- Azure Percept DK (https://docs.microsoft.com/en-us/azure/azure-percept/overview-azure-percept-dk) is an edge AI development kit designed for developing vision and audio AI solutions with Azure Percept Studio. For the purpose of this solution, it hosts two device application modules:

- Custom Vision AI Eye Module – receives images from the Percept DK camera, applies the custom training deployed and generates an inferencing data stream.

- Custom Component Count Module – custom developed for this solution. Processes the raw data received from the Eye Module, aggregates the count for each type of component detected and sends the result to IoT Hub along with a timestamp.

- VLC Media Player (https://www.videolan.org/) shows the live images along with inference data output by the Custom Vision AI Eye Module. A Web browser running against the Webstream Module could also be used.

- Azure Percept Studio (https://docs.microsoft.com/en-us/azure/azure-percept/overview-azure-percept-studio) is the single launch point for creating edge AI models and solutions. Azure Percept Studio allows you to discover and complete guided workflows that make it easy to integrate edge AI-capable hardware and powerful Azure AI and IoT cloud services.

- Azure IoT Hub (https://azure.microsoft.com/en-us/services/iot-hub/) acts as the cloud gateway, ingesting device telemetry at scale. IoT Hub also supports bi-directional communication back to devices, allowing actions to be sent from the cloud to the device.

- Azure Stream Analytics (https://azure.microsoft.com/en-us/services/stream-analytics/) is an easy-to-use, real-time analytics service that is designed for mission-critical workloads. It enables creation of an end-to-end serverless streaming pipeline with just a few clicks. For the current solution it performs a simple transformation – the timestamp received in each sample is converted to DateTime format.

- Azure SQL Database (https://azure.microsoft.com/en-us/products/azure-sql/database/) is the relational database for transactional and other non-IoT data. For this solution, it holds the telemetry data for potential data warehouse use.

- Power BI (https://powerbi.microsoft.com/) is a suite of business analytics tools to analyze data and share insights. In this solution it displays a simple visualization of the telemetry data stored in Azure SQL Database.

- Azure IoT Explorer (https://docs.microsoft.com/en-us/azure/iot-fundamentals/howto-use-iot-explorer) is a graphical tool for interacting with devices connected to the IoT Hub. In this solution it is used to display the telemetry data as it passes through the IoT Hub.
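To make the role of the Custom Component Count Module more concrete, here is a minimal Python sketch of the kind of aggregation it performs: counting detections per component type and attaching a timestamp. This is an illustration only, not the module's actual code. The payload layout, the field names (`detections`, `label`, `confidence`), the `min_confidence` threshold, and the output message shape are all assumptions made for the example.

```python
import json
from collections import Counter
from datetime import datetime, timezone

# Hypothetical inference payload; the real Eye Module output format may differ.
SAMPLE_INFERENCE = {
    "detections": [
        {"label": "socket", "confidence": 0.91},
        {"label": "bolt", "confidence": 0.84},
        {"label": "socket", "confidence": 0.32},
        {"label": "bolt", "confidence": 0.77},
    ]
}


def count_components(inference, min_confidence=0.5):
    """Count detections per label, skipping those below the (assumed) threshold."""
    counts = Counter(
        detection["label"]
        for detection in inference["detections"]
        if detection["confidence"] >= min_confidence
    )
    return dict(counts)


def build_telemetry(inference, now=None):
    """Build a JSON telemetry message holding the counts and a Unix timestamp."""
    now = now or datetime.now(timezone.utc)
    message = {
        "timestamp": int(now.timestamp()),
        "counts": count_components(inference),
    }
    return json.dumps(message)


if __name__ == "__main__":
    print(build_telemetry(SAMPLE_INFERENCE))
```

In a deployed module, the resulting message would be handed to the IoT Hub client rather than printed; that part is omitted here because it depends on the device configuration.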

Solution Implementation

The implementation consists of deploying and configuring the above-listed components as described in the following steps:

- Azure Percept DK is set up with instances of IoT Hub and Percept Studio in Azure cloud.

- The Custom Vision AI Eye Module of Azure Percept DK is used to capture images of components.

- These images of components are uploaded to Azure Percept Studio.

- In the Custom Vision AI tool within Azure Percept Studio, the components are tagged along with their bounding boxes.

- A custom object detection model is trained using these tagged images.

- This trained model with acceptable performance is then deployed to the Azure Percept DK device.

- A customized component count module is created and deployed to the Azure Percept DK device.

- A VLC Media Player instance is created and configured to display the RTSP stream generated by Custom Vision AI Eye Module.

- An Azure SQL Database instance is created with a table having a schema aligned with the telemetry data.

- An Azure Stream Analytics job is created that reads data from IoT Hub, transforms the timestamp to DateTime format and sends the transformed data to Azure SQL Database.

- A Power BI instance is created that reads data from the Azure SQL Database instance and produces a simple visualization.

- An Azure IoT Explorer instance is created and connected as a service to the IoT Hub.
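The timestamp transformation and the table aligned with the telemetry data can be pictured with a small local stand-in. The Python sketch below uses the standard library's `sqlite3` in place of Azure SQL Database and a plain function in place of the Azure Stream Analytics job. The message shape, the assumption that the timestamp arrives as Unix epoch seconds, and the table and column names (`component_counts`, `event_time`, `component`, `quantity`) are hypothetical and chosen only for illustration.

```python
import sqlite3
from datetime import datetime, timezone


def to_rows(message):
    """Flatten one telemetry message into (event_time, component, quantity) rows.

    Assumes message["timestamp"] is Unix epoch seconds (an assumption).
    """
    event_time = datetime.fromtimestamp(message["timestamp"], tz=timezone.utc)
    formatted = event_time.strftime("%Y-%m-%d %H:%M:%S")
    return [
        (formatted, component, quantity)
        for component, quantity in sorted(message["counts"].items())
    ]


def store(conn, rows):
    """Create the (hypothetical) telemetry table if needed and insert the rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS component_counts ("
        "event_time TEXT NOT NULL, "
        "component TEXT NOT NULL, "
        "quantity INTEGER NOT NULL)"
    )
    conn.executemany("INSERT INTO component_counts VALUES (?, ?, ?)", rows)
    conn.commit()


# In-memory database used as a local stand-in for Azure SQL Database.
conn = sqlite3.connect(":memory:")
sample_message = {"timestamp": 1640995200, "counts": {"socket": 1, "bolt": 2}}
store(conn, to_rows(sample_message))
```

Storing one row per component type and timestamp keeps the table easy to chart as a time series, although other schemas would work equally well.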

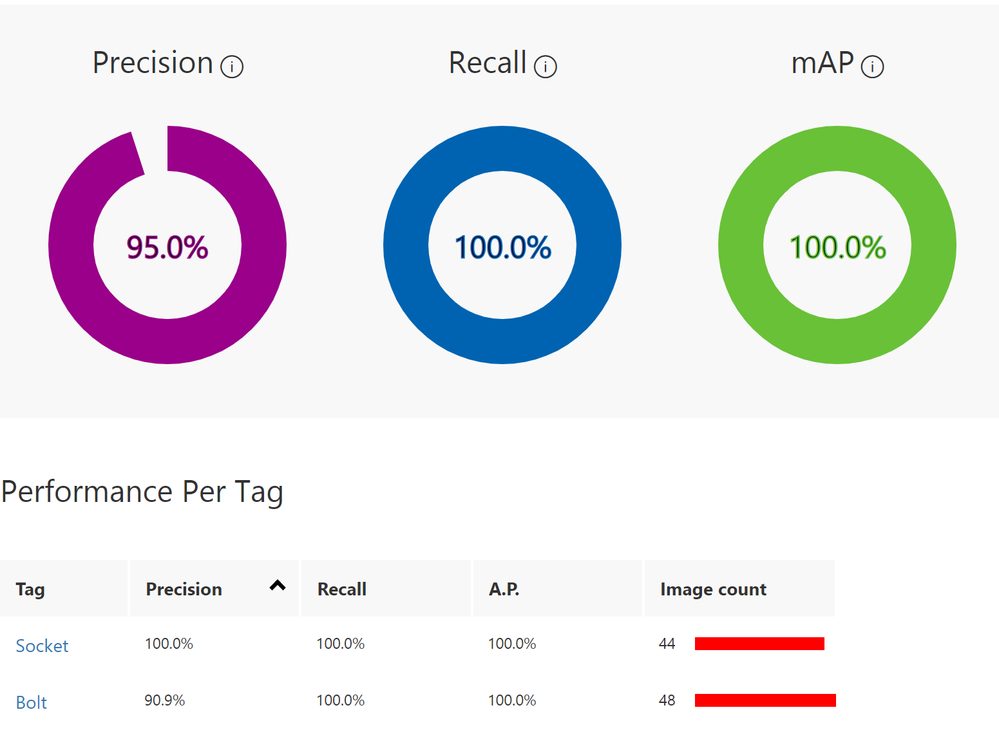

For the current solution, sockets from a mechanical toolset and bolts were used as components to train the object detection model. Two iterations were run:

- First Iteration

The first used 30+ images, the General domain, and a training budget of 4 hours, which resulted in the following performance metrics:

- Second Iteration

The second used 40+ images, the General (A1) domain, and a training budget of 24 hours, which resulted in the following performance metrics:

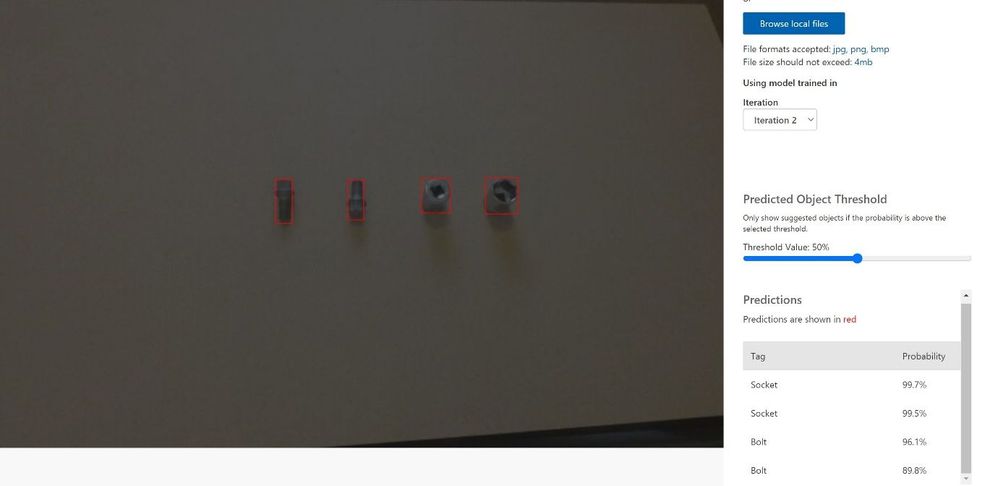

The second iteration gave much better accuracy and was deployed for the solution. The results of a test run with this iteration are shown below. As can be seen, the different components are recognized with good accuracy.

Data Flow for Solution Execution

- The Azure Eye Module runs model inferencing on the real-time camera feed and sends the results to the Component Count Module.

- The VLC Media Player displays the same feed from the Eye Module.

- The Component Count Module sends the count of components and the associated timestamp in real time as telemetry data to Azure IoT Hub.

- The IoT Explorer displays the telemetry data as received at the IoT Hub.

- The Azure Stream Analytics job takes the Azure IoT Hub data as input, transforms it, and outputs it to an Azure SQL Database instance.

- The Power BI instance dashboard takes input from the Azure SQL database instance and generates a time series showing the number of different components at different timestamps.
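The final step of this data flow, turning stored rows into a time series per component type, can be sketched in a few lines of Python. Power BI performs this grouping itself; the function below is only an illustration of the shape of the data, and the row layout `(event_time, component, quantity)` and the sample values are assumptions.

```python
from collections import defaultdict


def pivot_counts(rows):
    """Group (event_time, component, quantity) rows into one series per component.

    Rows are sorted first so each series comes out in chronological order.
    """
    series = defaultdict(list)
    for event_time, component, quantity in sorted(rows):
        series[component].append((event_time, quantity))
    return dict(series)


# Hypothetical rows, as they might be read from the telemetry table.
sample_rows = [
    ("2022-01-01 00:00:10", "bolt", 3),
    ("2022-01-01 00:00:00", "socket", 1),
    ("2022-01-01 00:00:00", "bolt", 2),
    ("2022-01-01 00:00:10", "socket", 1),
]

if __name__ == "__main__":
    for component, points in pivot_counts(sample_rows).items():
        print(component, points)
```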

A capture of the VLC Media Player output is below, showing the components being detected correctly.

Conclusions

By deploying a customized object detection model on Azure Percept, we were able to correctly recognize different non-standard components. With the help of an in-house developed Component Count Module, the telemetry data flow was modified, and the Power BI dashboard was populated accordingly. The solution was thus effective in replicating a real-time manufacturing monitoring scenario.

The ease with which we were able to create a sophisticated monitoring tool excites and motivates us to further explore the capabilities of Percept DK in the context of other use cases and to create applications that will enable our customers in diverse verticals to successfully transform their businesses and operations.

Resources for learning more about Azure Percept

- The cutting edge of AI: Discover the possibilities with Azure Percept

- Azure Percept | Edge Computing Solution | Microsoft Azure

- Explore pre-built AI models

- Azure Percept product overview

- Azure Percept videos

Posted at https://sl.advdat.com/3KJ4vKg