One of the core scenarios we help developers deliver is virtual visits, in which a business hosting a meeting invites an external customer to a video call. Virtual visits occur across industries: a virtual medical appointment (tele-health), a meeting about a mortgage application (tele-banking), or even a virtual courtroom.

Azure Communication Services supports Web, native mobile, and service applications, so you can reach more customers on any device or platform. But Web applications are especially popular for virtual visit end-user experiences. When well implemented, Web applications let users on any device join a communication experience simply by following a hyperlink, without installing an app.

You can take advantage of Azure’s open-source UI SDK to build mobile-friendly Web experiences very quickly. The UI SDK makes building an app easy while still letting you customize appearance and theming significantly.

But some developers may want to use the underlying Azure Communication Services Web Calling SDK directly, most often because they want to customize the user experience beyond the scope of the UI SDK controls. We wrote this post as a checklist to help advanced developers deliver these key user experiences with the low-level Calling SDK:

- Request access to a user's audio and video devices

- Continue the call even when the browser is in the background

- Handle UI changes to layout, like when screen-sharing is enabled

- Handle changes to a call’s state, such as being put on hold

- Handle changes to a user’s devices, such as when a headset is turned off

All of this material is covered in our Calling How To guide. If you run into trouble or have any feedback, please contact our team through Azure Q&A or Azure Support.

Permissions

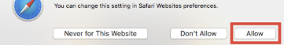

A web application must acquire access to the user's microphone and camera in order to participate in a voice or video call. Most browsers and operating systems protect users by requiring apps to request access to these devices explicitly, through standardized permission prompts. Below are some examples of these permission flows:

In some cases, users may not see these pop-ups because their permissions are blocked persistently. This happens most commonly when an end user declines an access request once (perhaps accidentally) and selects "Never" for this website, or when the user has configured the operating system to restrict the browser's device access entirely. Below is a screenshot of the macOS setting that controls browser access.

It’s crucial for the application to make sure users have granted microphone and camera permissions, and to walk them through the permissions process if they haven’t. Otherwise, the user will not be heard or seen!

Recommended code flow

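import { CallClient } from '@azure/communication-calling';

// tokenCredential: an AzureCommunicationTokenCredential (from @azure/communication-common) created elsewhere
const callClient = new CallClient();
const callAgent = await callClient.createCallAgent(tokenCredential, { /* ... */ });
const deviceManager = await callClient.getDeviceManager();

// Triggers the browser's permission prompts and resolves with which devices were granted
const permissions = await deviceManager.askDevicePermission({ audio: true, video: true });

if (permissions.audio && permissions.video) {
    // allow calling
} else {
    // show an educational page that teaches users how to grant/enable permissions for their OS/browser
}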
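To keep this check reusable and testable, the branch above can be factored into a small gate that runs before the join button is enabled. This is a minimal sketch, not part of the SDK: `DevicePermissionRequester`, `checkDevicePermissions` and the `requireVideo` flag are hypothetical names, and the requester interface only mirrors the shape of `deviceManager.askDevicePermission` (a promise of `{ audio?: boolean; video?: boolean }`). The `missing` list lets an educational page say exactly which permission still needs fixing.

```typescript
// Hypothetical structural stand-in for the part of the DeviceManager used here.
interface DeviceAccessResult {
  audio?: boolean;
  video?: boolean;
}

interface DevicePermissionRequester {
  askDevicePermission(request: { audio: boolean; video: boolean }): Promise<DeviceAccessResult>;
}

type PermissionDecision =
  | { kind: "allow" }
  | { kind: "educate"; missing: Array<"audio" | "video"> };

// Ask once, before the user can join, and report which devices are still missing.
// requireVideo = false (an assumption) supports audio-only experiences.
async function checkDevicePermissions(
  deviceManager: DevicePermissionRequester,
  requireVideo: boolean,
): Promise<PermissionDecision> {
  const access = await deviceManager.askDevicePermission({ audio: true, video: requireVideo });
  const missing: Array<"audio" | "video"> = [];
  if (access.audio !== true) {
    missing.push("audio");
  }
  if (requireVideo && access.video !== true) {
    missing.push("video");
  }
  return missing.length === 0 ? { kind: "allow" } : { kind: "educate", missing };
}
```

Treating an absent field as "not granted" is deliberate: it errs on the side of showing guidance rather than letting a user into a call where they cannot be heard.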

Common mistakes

- If audio/video permissions are not granted, do not allow the user to continue to the call. Instead, give the user instructions on how to grant access, with detailed information based on the OS, version, browser, and permission status (e.g., denied forever).

- Audio/video permissions are granted “too late”. Users should grant permissions early enough, not while the call is already in progress. A permission request times out after 20 seconds; if the user doesn’t grant permissions within that time, the permissions are marked as declined even if the user accepts them at a later stage.

- If microphone permissions are not granted and the user ends up in the call anyway, your app will observe an unexpected mute through the user-facing diagnostics APIs.

- Apps should not use any third-party libraries that request device permissions; they can interfere with ACS permissions and introduce regressions during the actual call. For example, make sure no third-party microphone or speaker volume indicators are used.
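The first point above calls for instructions tailored to the user's OS and browser. One way to organize that is to map a coarse platform/browser detection to a help page. This is a hypothetical sketch: `detectPlatform`, `permissionHelpPage` and the `/help/permissions/...` paths are illustrative names, and the detection relies on simple user-agent substring checks, which is an assumption (user-agent sniffing is fragile, so a production app may prefer a dedicated parser).

```typescript
type Os = "ios" | "android" | "macos" | "windows" | "other";
type Browser = "edge" | "chrome" | "firefox" | "safari" | "other";

// Coarse user-agent detection. Order matters: iOS UAs also contain "Mac OS X",
// and Edge UAs also contain "Chrome/" and "Safari/".
function detectPlatform(userAgent: string): { os: Os; browser: Browser } {
  const os: Os = /iPhone|iPad|iPod/.test(userAgent) ? "ios"
    : /Android/.test(userAgent) ? "android"
    : /Macintosh|Mac OS X/.test(userAgent) ? "macos"
    : /Windows/.test(userAgent) ? "windows"
    : "other";
  const browser: Browser = /Edg(A|iOS)?\//.test(userAgent) ? "edge"
    : /CriOS|Chrome\//.test(userAgent) ? "chrome"
    : /FxiOS|Firefox\//.test(userAgent) ? "firefox"
    : /Safari\//.test(userAgent) ? "safari"
    : "other";
  return { os, browser };
}

// Hypothetical set of OS/browser combinations that have a dedicated help page.
const SUPPORTED_GUIDES = new Set<string>([
  "ios-safari", "android-chrome", "macos-chrome", "macos-safari", "windows-chrome", "windows-edge",
]);

// Pick the most specific permissions help page, falling back to generic guidance.
function permissionHelpPage(userAgent: string): string {
  const { os, browser } = detectPlatform(userAgent);
  const key = `${os}-${browser}`;
  return SUPPORTED_GUIDES.has(key) ? `/help/permissions/${key}` : "/help/permissions/generic";
}
```

In a browser you would pass `navigator.userAgent`; the fallback page keeps unknown combinations from ending up with no guidance at all.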

Recovery

There are two cases to explore:

Permissions denied at the browser level

- If the user is not in the call, ask them to refresh the browser and allow permissions.

- If the user is in the call, you can hang up and refresh in code:

// hangUp() is a method of the active Call object, not of the CallAgent
await call.hangUp();
window.location.reload();

Permissions denied at the OS level, or set to "Never" for this website

In this case, refreshing won’t fix the permissions. The web application has to inform the user how to enable permissions for their OS and browser, and then ask them to refresh the page as described above once the permissions are enabled.
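The two recovery cases above can be captured in a small decision helper so every entry point in the app handles them the same way. A minimal sketch: `PermissionBlock`, `RecoveryAction` and `chooseRecovery` are hypothetical names, and how the app learns which case applies (for example, from the user's answers in the educational flow) is left open.

```typescript
// Hypothetical description of where the permission was blocked.
type PermissionBlock = "browser-prompt-denied" | "os-or-never-for-site";

type RecoveryAction =
  | { kind: "ask-to-refresh" }              // not in a call yet: ask the user to reload and allow
  | { kind: "hang-up-and-reload" }          // in a call: hang up, then reload in code
  | { kind: "show-settings-instructions" }; // a reload alone won't help

function chooseRecovery(block: PermissionBlock, inCall: boolean): RecoveryAction {
  if (block === "os-or-never-for-site") {
    // Explain the OS/browser settings first; the user refreshes after enabling access.
    return { kind: "show-settings-instructions" };
  }
  return inCall ? { kind: "hang-up-and-reload" } : { kind: "ask-to-refresh" };
}
```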

Interruptions

During an active call, even if the application successfully got access to the device’s microphone and camera, another application or the operating system can take over those devices at any point. We call this an interruption. For example, if a user in an ACS call answers a traditional phone call, microphone access suddenly moves from the web page to the phone app. There are many types of interruptions, including:

- PSTN Call: will take over the microphone.

- FaceTime call on iOS: will take over the microphone and camera.

- Siri: will take over the microphone.

- Voice recorder: will take over the microphone.

- YouTube: will take over the speakers and microphone.

Recommended code flow

- User is in an ACS call unmuted with audio and video on Safari on iOS.

- User receives a PSTN call and accepts or rejects the call.

- User navigates back to the ACS call, which is now muted with video turned off.

- The application UI shall show the correct state on the buttons and possibly prompt the user to re-enable them.

- User unmutes and starts video again.

- User is in the call unmuted with audio and video.

import { Features, DiagnosticQuality, MediaDiagnosticChangedEventArgs } from '@azure/communication-calling';

call.feature(Features.UserFacingDiagnostics).media.on('diagnosticChanged', (diagnosticInfo: MediaDiagnosticChangedEventArgs) => {
    // `diagnostic` holds the name of the diagnostic that changed
    const isMicrophoneDiagnostic =
        diagnosticInfo.diagnostic === 'microphoneMuteUnexpectedly' ||
        diagnosticInfo.diagnostic === 'microphoneNotFunctioning';

    if (diagnosticInfo.valueType === 'DiagnosticQuality' && isMicrophoneDiagnostic) {
        if (diagnosticInfo.value === DiagnosticQuality.Bad) {
            // Inform the user that the microphone stopped working, permanently or temporarily, and ask them to unmute
        } else if (diagnosticInfo.value === DiagnosticQuality.Good) {
            // Inform the user that the microphone recovered
        }
    }
});
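Keeping the "what should the user see" decision separate from the event subscription makes it easy to unit-test. The sketch below is hypothetical: `MediaDiagnosticEvent` and `microphoneBanner` are illustrative names whose fields only mirror the ones used in the handler above, the string `Quality` values simplify the SDK's `DiagnosticQuality` enum, and the banner text is placeholder copy.

```typescript
type Quality = "Good" | "Poor" | "Bad";

// Local stand-in mirroring the fields of MediaDiagnosticChangedEventArgs used above.
interface MediaDiagnosticEvent {
  diagnostic: string;
  valueType: "DiagnosticQuality" | "DiagnosticFlag";
  value: Quality | boolean;
}

const MIC_DIAGNOSTICS = new Set<string>(["microphoneMuteUnexpectedly", "microphoneNotFunctioning"]);

// Returns the banner to show for a microphone interruption, or undefined when the
// event is not a microphone quality change the UI needs to react to.
function microphoneBanner(event: MediaDiagnosticEvent): string | undefined {
  if (!MIC_DIAGNOSTICS.has(event.diagnostic) || event.valueType !== "DiagnosticQuality") {
    return undefined;
  }
  if (event.value === "Bad") {
    return "Your microphone was interrupted (for example, by a phone call). Tap unmute to be heard again.";
  }
  if (event.value === "Good") {
    return "Your microphone is working again.";
  }
  return undefined;
}
```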

Common mistakes

Applications should indicate that something interrupted the call flow and that the state is no longer what it was. Make sure the user is informed and the call button state reflects reality.

Recovery

Guide the user to unmute and restart video after the interruption. You can verify that the recovery was successful by listening for the Good user-facing diagnostic values. On iOS specifically, your app should prompt the user to tap unmute and start video to return to a full audio/video ACS call, while on Android it should prompt the user to start video only. Other platforms should recover seamlessly without any user action.
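The platform-specific guidance above can be encoded in a small function that decides which prompts to show. A minimal sketch: `stepsAfterInterruption`, `Platform` and `MediaBefore` are hypothetical, and it assumes the app recorded whether the user was muted and had video on before the interruption, so it doesn't prompt the user to re-enable something they had turned off themselves.

```typescript
type Platform = "ios" | "android" | "other";
type RecoveryStep = "unmute" | "startVideo";

// What the user had on before the interruption (assumed to be recorded by the app).
interface MediaBefore {
  muted: boolean;
  videoOn: boolean;
}

// iOS: prompt to unmute and to start video; Android: prompt to start video only;
// other platforms are expected to recover without user action, so no prompts.
function stepsAfterInterruption(platform: Platform, before: MediaBefore): RecoveryStep[] {
  const steps: RecoveryStep[] = [];
  if (platform === "ios" && !before.muted) {
    steps.push("unmute");
  }
  if ((platform === "ios" || platform === "android") && before.videoOn) {
    steps.push("startVideo");
  }
  return steps;
}
```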

Background

On iOS and Android, when there is an active call with audio and video and the user moves the browser application to the background, audio keeps flowing automatically but outgoing video stops automatically. This happens for privacy reasons and is controlled by the operating system.

Recommended code flow

The application shall inform the user when this happens, and the camera button shall reflect the correct state.

import { Features, DiagnosticQuality, MediaDiagnosticChangedEventArgs } from '@azure/communication-calling';

call.feature(Features.UserFacingDiagnostics).media.on('diagnosticChanged', (diagnosticInfo: MediaDiagnosticChangedEventArgs) => {
    // `diagnostic` holds the name of the diagnostic that changed
    if (diagnosticInfo.valueType === 'DiagnosticQuality' && diagnosticInfo.diagnostic === 'cameraStoppedUnexpectedly') {
        if (diagnosticInfo.value === DiagnosticQuality.Bad) {
            // Inform the user that the camera stopped working and ask them to start video again
        } else if (diagnosticInfo.value === DiagnosticQuality.Good) {
            // Inform the user that the camera recovered
        }
    }
});
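When the browser returns from the background, more than one device may still be stopped (for example, the camera after backgrounding and the microphone after a phone call). The sketch below aggregates those events so the UI can keep a single notice visible until everything has recovered. `UnexpectedStopTracker` is a hypothetical class; it only consumes the diagnostic name and a simplified quality string, which you would map from the SDK event inside your handler.

```typescript
type Quality = "Good" | "Poor" | "Bad";

// Tracks which "unexpected stop" diagnostics are currently Bad.
class UnexpectedStopTracker {
  private readonly active = new Set<string>();

  // Bad adds the diagnostic, Good clears it, Poor leaves the current state unchanged.
  update(diagnostic: string, value: Quality): void {
    if (value === "Bad") {
      this.active.add(diagnostic);
    } else if (value === "Good") {
      this.active.delete(diagnostic);
    }
  }

  get hasIssues(): boolean {
    return this.active.size > 0;
  }

  // Sorted for stable rendering in the UI.
  get issues(): string[] {
    return Array.from(this.active).sort();
  }
}
```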

Common mistakes

A very common mistake is custom state management in the application, where the state is updated only on user actions and ignores unpredictable events such as interruptions.

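// Toggle video based on the SDK state (call.localVideoStreams), not on a UI-level flag.
// Assumes `call` and `localVideoStream` (a LocalVideoStream for the selected camera) are in scope.
const toggleVideo = async (): Promise<void> => {
    if (call.localVideoStreams.length === 0) {
        // No local video according to the SDK: start it
        try {
            await call.startVideo(localVideoStream);
        } catch (error) {
            console.log(error);
        }
    } else {
        // Local video is on according to the SDK: stop it
        try {
            await call.stopVideo(call.localVideoStreams[0]);
        } catch (error) {
            console.log(error);
        }
    }
};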

Avoid maintaining this state at the UI level. Instead, ask the SDK whether the user is muted or has video on, make sure you subscribe to the events that cover all the edge cases, and update the UI when needed.

To check whether the user is muted, use:

call.isMuted;
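One way to follow this advice is to compute button state from the SDK whenever a relevant event fires, rather than storing it. A sketch assuming the SDK's `call.isMuted` and `call.localVideoStreams` (whose length indicates whether local camera video is on); `CallMediaState`, `ButtonState` and `deriveButtonState` are hypothetical names. In an app you would call it from handlers such as the call's `isMutedChanged` and `localVideoStreamsUpdated` events.

```typescript
// Structural stand-in for the two Call properties read here.
interface CallMediaState {
  readonly isMuted: boolean;
  readonly localVideoStreams: ReadonlyArray<unknown>;
}

interface ButtonState {
  micLabel: "Mute" | "Unmute";
  cameraLabel: "Start video" | "Stop video";
}

// Derive labels from the SDK every time instead of caching them in UI state,
// so interruptions the user didn't trigger are still reflected on the buttons.
function deriveButtonState(call: CallMediaState): ButtonState {
  return {
    micLabel: call.isMuted ? "Unmute" : "Mute",
    cameraLabel: call.localVideoStreams.length > 0 ? "Stop video" : "Start video",
  };
}
```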

Layout updates

During a call, web applications very often switch between layouts: from a grid of participants to a screen-sharing layout, or to a one-to-one view. It is important to the end user that you support these varied layouts dynamically.

Recommended code flow

Your app should monitor participants being added and removed and use that information to decide which video views are rendered and attached to the DOM. When the elements on the screen that contain these views are re-created, make sure the views are attached to the DOM again:

import { RemoteVideoStream, VideoStreamRenderer } from '@azure/communication-calling';

// Whenever participants are added/removed, or the layout changes, the application has to re-create
// the rendered streams in the DOM, or re-use/re-attach them properly after the layout update is done.
// Assumes `call`, `videoContainer` (the current layout's container element) and an app-defined
// `cleanup(stream)` helper that disposes the stream's renderer are in scope.
const render = async (stream: RemoteVideoStream) => {
    stream.on('isAvailableChanged', async () => {
        // handle state changes, e.g. render when the stream becomes available, clean up when it stops
    });

    if (stream.isAvailable) {
        try {
            const renderer = new VideoStreamRenderer(stream);
            const view = await renderer.createView();
            // Attach to the DOM. Renderers can be re-used, but have to be re-attached
            // after full layout changes or if the DOM elements are re-created.
            videoContainer.appendChild(view.target);
        } catch (error) {
            console.log(error);
        }
    }
};

call.on('remoteParticipantsUpdated', e => {
    e.added.forEach(p => {
        // subscribe to participant events, e.g.
        p.on('videoStreamsUpdated', d => {
            // render newly added remote streams
            d.added.forEach(stream => render(stream));
            // clean up the renderers of removed streams
            d.removed.forEach(stream => cleanup(stream));
        });

        // render all the streams the participant already has
        p.videoStreams.forEach(stream => render(stream));
    });

    e.removed.forEach(p => {
        // unsubscribe and clean up the renderers of the departed participant's streams
        p.videoStreams.forEach(stream => cleanup(stream));
    });
});

...

Common mistakes

A very common mistake is for applications to create these elements once and never listen for changes, whether to participants, to streams, or to the UI. That can lead to missing participants, missing camera or screen-sharing streams, or missing everything.

Recovery

Make sure your app subscribes to all the necessary events and updates the DOM elements accordingly when needed.
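Re-attaching views after a layout change, instead of recreating them, needs a little bookkeeping. Below is a minimal sketch of one way to do it, keyed on the stream's numeric `id`. `ViewRegistry`, `RendererView` and `Container` are hypothetical names; the interfaces only mirror the parts of the SDK's `VideoStreamRendererView` (`target`, `dispose()`) and of DOM elements (`appendChild`) that the sketch uses, so it can be exercised without a browser. In an app, `createView` would wrap `new VideoStreamRenderer(stream).createView()`; this sketch tracks only views, and you may prefer to track and dispose the renderer itself.

```typescript
// Minimal stand-ins for the SDK view and a DOM container (hypothetical).
interface RendererView<TNode> {
  target: TNode;
  dispose(): void;
}

interface Container<TNode> {
  appendChild(node: TNode): void;
}

// Keeps one view per stream id so it can be re-attached after layout changes
// and disposed when the stream or participant goes away.
class ViewRegistry<TNode> {
  private readonly views = new Map<number, Promise<RendererView<TNode>>>();

  async attach(
    streamId: number,
    container: Container<TNode>,
    createView: () => Promise<RendererView<TNode>>,
  ): Promise<void> {
    let pending = this.views.get(streamId);
    if (!pending) {
      // First time we see this stream: create the view once and remember the promise,
      // so concurrent attach calls share it instead of creating duplicates.
      pending = createView().catch((error: unknown) => {
        this.views.delete(streamId); // allow a retry on the next attach
        throw error;
      });
      this.views.set(streamId, pending);
    }
    const view = await pending;
    container.appendChild(view.target);
  }

  async cleanup(streamId: number): Promise<void> {
    const pending = this.views.get(streamId);
    if (!pending) {
      return;
    }
    this.views.delete(streamId);
    (await pending).dispose();
  }

  get size(): number {
    return this.views.size;
  }
}
```

Re-attaching an existing view works with real DOM containers because `appendChild` moves a node that is already in the document instead of copying it.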

Call actions

Many call-related actions, such as start/stop video or mute/unmute, are async operations. Triggering them repeatedly without waiting for each action to complete can have unpredictable results, especially if the application keeps the state at the UI level.

Recommended code flow

Check the SDK state to see whether the user is muted, then trigger the action and wait for it to complete.

const toggleMute = async () => {
    try {
        if (!call.isMuted) {
            await call.mute();
        } else {
            await call.unmute();
        }
    } catch (e) {
        console.log(e);
    }
};

Common mistakes

A common mistake is using local state instead of SDK state to decide whether the app should mute or unmute the call. This local state can get out of sync at any point because of unpredictable events such as interruptions, which will cause your toggle mute function to try to mute an already muted user.

Recovery

Protect these actions from rapid, repeated invocation: make sure the application awaits each async operation and updates its state properly when needed.
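A simple way to protect a toggle from rapid repeated clicks is to ignore new requests while the previous one is still in flight, and to read the SDK state only when the action actually runs. A minimal sketch under those assumptions: `singleFlight` and `makeToggleMute` are hypothetical helpers, and `MutableCall` only mirrors the `isMuted`, `mute()` and `unmute()` members of the SDK's `Call`.

```typescript
// Structural stand-in for the Call members used here.
interface MutableCall {
  readonly isMuted: boolean;
  mute(): Promise<void>;
  unmute(): Promise<void>;
}

// Wraps an async action so that calls arriving while it is still running
// share the in-flight promise instead of starting conflicting requests.
function singleFlight(action: () => Promise<void>): () => Promise<void> {
  let inFlight: Promise<void> | undefined;
  return () => {
    if (!inFlight) {
      inFlight = action().then(
        () => { inFlight = undefined; },
        (error: unknown) => { inFlight = undefined; throw error; },
      );
    }
    return inFlight;
  };
}

const makeToggleMute = (call: MutableCall) =>
  singleFlight(async () => {
    // Read the SDK state at the moment the action runs, not a cached UI flag.
    if (call.isMuted) {
      await call.unmute();
    } else {
      await call.mute();
    }
  });
```

Ignoring extra clicks, rather than queuing them, is a design choice: a queue could replay a stale intent after an interruption has already changed the mute state.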

Devices

The application shall handle device enumeration, listen for device changes, and update its device lists accordingly. It shall also allow users to select devices other than the default ones and pick a different microphone, camera, or speaker when needed.

Recommended code flow

Applications shall allow users to select their preferred device, listen for changes to the existing devices, and select an alternative if the current one suddenly becomes unavailable.

deviceManager.on('videoDevicesUpdated', async e => {
    e.added.forEach(addedCameraDevice => {
        // camera added
    });

    e.removed.forEach(removedCameraDevice => {
        // camera removed
    });

    // If the camera currently used in the call was removed, pick another available one
    // (here simply the first) and switch the local video stream's source to it.
    const localVideoStream = call.localVideoStreams[0];
    if (localVideoStream && e.removed.some(device => device.id === localVideoStream.source.id)) {
        const cameras = await deviceManager.getCameras();
        if (cameras.length > 0) {
            await localVideoStream.switchSource(cameras[0]);
        }
    }
});

deviceManager.on('audioDevicesUpdated', e => {
    e.added.forEach(addedAudioDevice => {
        if (addedAudioDevice.deviceType === 'Speaker') {
            // it is a speaker
        }
        if (addedAudioDevice.deviceType === 'Microphone') {
            // it is a microphone
        }
    });

    e.removed.forEach(removedAudioDevice => {
        // audio device removed
        if (removedAudioDevice.deviceType === 'Speaker') {
            // it is a speaker
        }
        if (removedAudioDevice.deviceType === 'Microphone') {
            // it is a microphone
        }
    });
});

deviceManager.on('selectedSpeakerChanged', () => {
    // update the selected speaker device, e.g. from deviceManager.selectedSpeaker
});

deviceManager.on('selectedMicrophoneChanged', () => {
    // update the selected microphone device, e.g. from deviceManager.selectedMicrophone
});

Common mistakes

A common mistake is for apps to use the default device without making it clear to the end user that they can select another one. Devices can break or stop working, and not letting the user select another device, or not switching to an alternative when a device is no longer available, can cause issues during an active call.

Recovery

The application shall listen for device updates and switch sources accordingly when needed.
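Both the camera and audio handlers need the same decision: is a switch needed, and to which device? A hypothetical helper for that decision is sketched below. `DeviceLike` mirrors only the `id` and `name` of the SDK's device info objects, and `preferredId` assumes the app remembers the user's last explicit selection (for example, in local storage). With the SDK you would pass the current device and the list from `deviceManager.getCameras()` (or the microphone/speaker lists), then switch sources if a device is returned.

```typescript
// Minimal stand-in for the SDK's VideoDeviceInfo / AudioDeviceInfo.
interface DeviceLike {
  id: string;
  name: string;
}

// Decide which device to use after a device list update.
// Returns undefined when the current device is still present (no switch needed)
// or when no device is available at all.
function pickReplacement<T extends DeviceLike>(
  current: T | undefined,
  available: readonly T[],
  preferredId?: string,
): T | undefined {
  const currentId = current?.id;
  if (currentId !== undefined && available.some((d) => d.id === currentId)) {
    return undefined; // current device still works; keep it
  }
  // Prefer the device the user explicitly chose earlier, if it is back.
  const preferred = available.find((d) => d.id === preferredId);
  return preferred ?? available[0];
}
```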

Call state

Calls have many states that apps need to handle correctly:

// available call states
'None' | 'Connecting' | 'Ringing' | 'Connected' | 'LocalHold' | 'InLobby' | 'Disconnecting' | 'Disconnected' | 'EarlyMedia'

Recommended code flow

The application shall handle all of the call states above and update the UI accordingly. Many actions shall be guarded: for example, mute/unmute shall not be allowed while the call is in the Ringing state, and shall be allowed only when it makes sense.

Applications shall also keep other elements in sync; for example, the ringing sound shall stop as soon as the call is no longer in the Ringing state.

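const callStateChanged = () => {
    switch (call.state) {
        case 'Connecting':
            // render connecting screen
            break;
        case 'Connected':
            // render connected screen
            break;
        default:
            // handle the remaining states here (Ringing, LocalHold, InLobby, Disconnected, etc.)
            break;
    }
};

call.on('stateChanged', callStateChanged);
// Also handle the current state, in case it changed before the listener was attached
callStateChanged();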
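To make sure no state is silently ignored, the state-to-UI mapping can be written as an exhaustive switch that the TypeScript compiler checks. This sketch uses a local `CallState` union copied from the list above (the SDK exports its own `CallState` type, which may include more states in your SDK version); `CallUi` and `uiForState` are hypothetical, and the choices of when to play the ringing sound or allow mute are example policy, not SDK rules.

```typescript
// Mirrors the call states listed above (local copy for this sketch).
type CallState =
  | "None" | "Connecting" | "Ringing" | "Connected" | "LocalHold"
  | "InLobby" | "Disconnecting" | "Disconnected" | "EarlyMedia";

interface CallUi {
  screen: "idle" | "connecting" | "ringing" | "in-call" | "on-hold" | "lobby" | "ending";
  playRingingSound: boolean; // stops as soon as the call leaves "Ringing"
  canToggleMute: boolean;    // guards mute/unmute in states where it doesn't make sense
}

function uiForState(state: CallState): CallUi {
  switch (state) {
    case "Ringing":
      return { screen: "ringing", playRingingSound: true, canToggleMute: false };
    case "Connecting":
    case "EarlyMedia":
      return { screen: "connecting", playRingingSound: false, canToggleMute: false };
    case "Connected":
      return { screen: "in-call", playRingingSound: false, canToggleMute: true };
    case "LocalHold":
      return { screen: "on-hold", playRingingSound: false, canToggleMute: false };
    case "InLobby":
      return { screen: "lobby", playRingingSound: false, canToggleMute: true };
    case "Disconnecting":
      return { screen: "ending", playRingingSound: false, canToggleMute: false };
    case "None":
    case "Disconnected":
      return { screen: "idle", playRingingSound: false, canToggleMute: false };
    default: {
      // Compile-time check: adding a state to CallState without handling it is a type error.
      const unhandled: never = state;
      throw new Error(`Unhandled call state: ${String(unhandled)}`);
    }
  }
}
```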

Common mistakes

Applications often don’t cover all states and presume that the states are sequential. Calls usually go from

None -> Connecting -> Connected -> Disconnecting -> Disconnected

but sometimes the transitions between states are so fast that some of them appear never to have happened, and applications don’t handle them at all.

Another common pitfall is handling only the happy path and ignoring the rest. For example, User A calls User B and User B accepts the call, but what happens if User B rejects it? How should the UI inform User A?

Recovery

Apps should be able to handle multiple calls and call states. Nothing prevents the user from having an active call and receiving another one, so the application shall handle these edge cases by listening for changes on both existing and new calls.

callAgent.on('callsUpdated', e => {
    e.added.forEach(call => {
        // listen for state changes on these calls and update the UI accordingly
    });

    e.removed.forEach(call => {
        // clean up removed calls
    });
});

callAgent.on('incomingCall', args => {
    args.incomingCall.on('callEnded', endedArgs => {
        // display a "call ended" message
    });
});
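The "listen for state changes on these calls" step can be sketched as a tracker that keeps exactly one `stateChanged` subscription per call and removes it when the call goes away. This is a hypothetical sketch: `StatefulCall` only mirrors the `id`, `state` and `on`/`off('stateChanged', ...)` members the SDK's `Call` exposes, and `CallTracker` is an illustrative name. In an app you would forward the agent's event, e.g. `callAgent.on('callsUpdated', e => tracker.onCallsUpdated(e))`, adapting types as needed.

```typescript
// Hypothetical minimal shape of a call that reports state changes.
interface StatefulCall {
  readonly id: string;
  readonly state: string;
  on(event: "stateChanged", handler: () => void): void;
  off(event: "stateChanged", handler: () => void): void;
}

// Tracks several concurrent calls: one subscription per call id,
// removed again when the call is reported as removed.
class CallTracker {
  private readonly handlers = new Map<string, () => void>();

  constructor(private readonly onChange: (callId: string, state: string) => void) {}

  onCallsUpdated(e: { added: StatefulCall[]; removed: StatefulCall[] }): void {
    for (const call of e.added) {
      if (this.handlers.has(call.id)) {
        continue; // already tracked
      }
      const handler = () => this.onChange(call.id, call.state);
      this.handlers.set(call.id, handler);
      call.on("stateChanged", handler);
      handler(); // report the current state too, in case transitions already happened
    }
    for (const call of e.removed) {
      const handler = this.handlers.get(call.id);
      if (handler) {
        call.off("stateChanged", handler);
        this.handlers.delete(call.id);
      }
    }
  }

  get trackedCount(): number {
    return this.handlers.size;
  }
}
```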

Posted at https://sl.advdat.com/3DN9Bms